interpretability

some interesting papers on interpretable machine learning, largely organized based on this interpretable ml review (murdoch et al. 2019) and notes from this interpretable ml book (molnar 2019).

reviews

definitions

The definition of interpretability I find most useful is that given in murdoch et al. 2019: basically that interpretability requires a pragmatic approach in order to be useful. As such, interpretability is only defined with respect to a specific audience + problem and an interpretation should be evaluated in terms of how well it benefits a specific context. It has been defined and studied more broadly in a variety of works:

- Explore, Explain and Examine Predictive Models (biecek & burzykowski, in progress) - another book on exploratory analysis with interpretability

- Explanation Methods in Deep Learning: Users, Values, Concerns and Challenges (ras et al. 2018)

- Explainable Deep Learning: A Field Guide for the Uninitiated

- Interpretable Deep Learning in Drug Discovery

- Explainable AI: A Brief Survey on History, Research Areas, Approaches and Challenges

- Explainable Artificial Intelligence (XAI): Concepts, Taxonomies, Opportunities and Challenges toward Responsible AI

- Against Interpretability: a Critical Examination of the Interpretability Problem in Machine Learning - “where possible, discussion should be reformulated in terms of the ends of interpretability”

overviews

- Towards a Generic Framework for Black-box Explanation Methods (henin & metayer 2019)

- sampling - selection of inputs to submit to the system to be explained

- generation - analysis of links between selected inputs and corresponding outputs to generate explanations

- proxy - approximates model (ex. rule list, linear model)

- explanation generation - explains the proxy (ex. just give most important 2 features in rule list proxy, ex. LIME gives coefficients of linear model, Shap: sums of elements)

- interaction (with the user)

- this is a super useful way to think about explanations (especially local), but doesn’t work for SHAP / CD which are more about how much a variable contributes rather than a local approximation

- feature (variable) importance measurement review (VIM) (wei et al. 2015)

- often-termed sensitivity, contribution, or impact

- some of these can be applied to data directly w/out model (e.g. correlation coefficient, rank correlation coefficient, moment-independent VIMs)

- Pitfalls to Avoid when Interpreting Machine Learning Models (molnar et al. 2020)

- Feature Removal Is a Unifying Principle for Model Explanation Methods (covert, lundberg, & lee 2020)

- Interpretable Machine Learning: Fundamental Principles and 10 Grand Challenges (rudin et al. ‘21)
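The sampling / generation (proxy + explanation) steps from the henin & metayer framework above can be sketched in a few lines. This is only a LIME-style illustration under stated assumptions, not the paper's or LIME's implementation: the function names, the Gaussian sampling, and the exponential proximity kernel are all hypothetical choices made here.

```python
import math
import random


def solve_linear_system(A, b):
    """Solve A x = b with Gauss-Jordan elimination and partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * p for a, p in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def local_linear_explanation(black_box, x, n_samples=500, scale=0.5, seed=0):
    """Return the slopes of a proximity-weighted linear proxy fitted around x."""
    rng = random.Random(seed)
    d = len(x)
    # 1. sampling: select inputs near x to submit to the black box
    Z = [[xi + rng.gauss(0.0, scale) for xi in x] for _ in range(n_samples)]
    y = [black_box(z) for z in Z]
    # assumed proximity kernel: samples closer to x get larger weight
    w = [math.exp(-sum((zi - xi) ** 2 for zi, xi in zip(z, x)) / (2 * scale ** 2))
         for z in Z]
    # 2. proxy: weighted least squares on the features [1, z - x]
    rows = [[1.0] + [zi - xi for zi, xi in zip(z, x)] for z in Z]
    A = [[sum(wk * r[i] * r[j] for wk, r in zip(w, rows)) for j in range(d + 1)]
         for i in range(d + 1)]
    b = [sum(wk * r[i] * yk for wk, r, yk in zip(w, rows, y)) for i in range(d + 1)]
    beta = solve_linear_system(A, b)
    # 3. explanation generation: report the proxy's coefficients (drop the intercept)
    return beta[1:]
```

For a black box that is itself linear, e.g. `lambda z: 3 * z[0] - 2 * z[1] + 1`, the returned slopes should match the true coefficients up to numerical error; for a nonlinear black box they are only a local approximation.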

evaluating interpretability

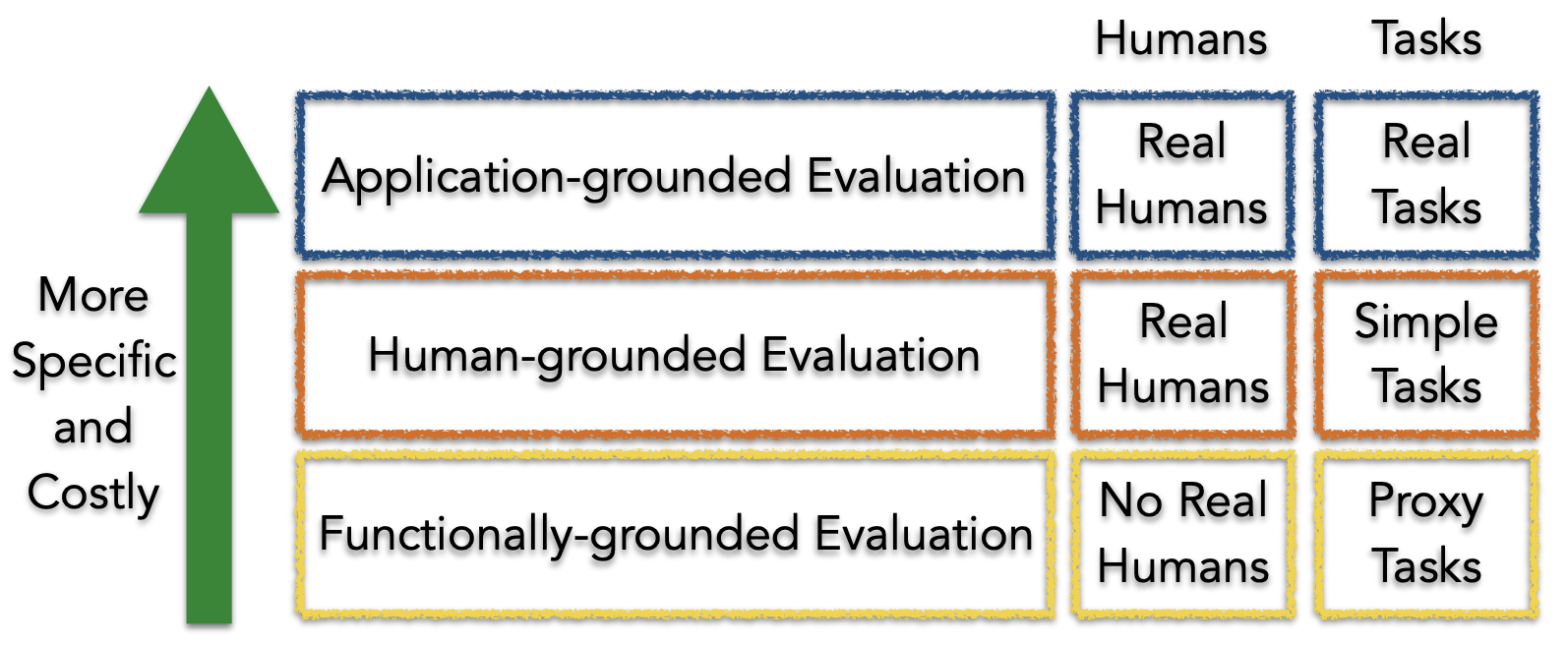

Evaluating interpretability can be very difficult (largely because it rarely makes sense to talk about interpretability outside of a specific context). The best possible evaluation of interpretability requires benchmarking it with respect to the relevant audience in a context. For example, if an interpretation claims to help understand radiology models, it should be tested based on how well it helps radiologists when actually making diagnoses. The papers here try to find more generic alternative ways to evaluate interp methods (or just define desiderata to do so).

- Towards A Rigorous Science of Interpretable Machine Learning (doshi-velez & kim 2017)

- Interpreting Interpretability: Understanding Data Scientists’ Use of Interpretability Tools for Machine Learning (kaur, …, caruana, wallach, vaughan, 2020)

- used contextual inquiry + survey of data scientists (SHAP & InterpretML GAMs)

- results indicate that data scientists over-trust and misuse interpretability tools

- few of our participants were able to accurately describe the visualizations output by these tools

- Benchmarking Attribution Methods with Relative Feature Importance (yang & kim 2019)

- train a classifier, add random stuff (like dogs) to the image, classifier should assign them little importance

- Visualizing the Impact of Feature Attribution Baselines

- top-k-ablation: should identify top pixels, ablate them, and want the model’s output to actually decrease

- center-of-mass ablation: also could identify center of mass of saliency map and blur a box around it (to avoid destroying feature correlations in the model)

- should we be true-to-the-model or true-to-the-data?

- Evaluating Feature Importance Estimates (hooker et al. 2019)

- remove-and-retrain test accuracy decrease

- Quantifying Interpretability of Arbitrary Machine Learning Models Through Functional Decomposition (molnar 2019)

- An Evaluation of the Human-Interpretability of Explanation (lage et al. 2019)

- Multi-value Rule Sets for Interpretable Classification with Feature-Efficient Representations (wang, 2018)

- measure how long it takes for people to calculate predictions from different rule-based models

- On the (In)fidelity and Sensitivity for Explanations

- Do Explanations Reflect Decisions? A Machine-centric Strategy to Quantify the Performance of Explainability Algorithms

- On Validating, Repairing and Refining Heuristic ML Explanations

- How Much Can We See? A Note on Quantifying Explainability of Machine Learning Models

- Manipulating and Measuring Model Interpretability (sangdeh et al. … wallach 2019)

- participants who were shown a clear model with a small number of features were better able to simulate the model’s predictions

- no improvements in the degree to which participants followed the model’s predictions when it was beneficial to do so.

- increased transparency hampered people’s ability to detect when the model makes a sizable mistake and correct for it, seemingly due to information overload

- Towards a Framework for Validating Machine Learning Results in Medical Imaging

- An Integrative 3C evaluation framework for Explainable Artificial Intelligence

- Evaluating Explanation Without Ground Truth in Interpretable Machine Learning (yang et al. 2019)

- predictability (does the knowledge in the explanation generalize well)

- fidelity (does explanation reflect the target system well)

- persuasibility (does the human find the explanation satisfying and comprehensible)
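The top-k ablation check listed in this section can be sketched on a toy model. This is a minimal illustration, assuming a linear model, a zero baseline, and weight-times-input attributions; the helper names are hypothetical and not taken from any of the cited papers.

```python
def ablate(x, indices, baseline=0.0):
    """Replace the selected features with a baseline value."""
    return [baseline if i in indices else v for i, v in enumerate(x)]


def top_k_ablation_drop(model, attributions, x, k, baseline=0.0):
    """Drop in model output after ablating the k highest-attributed features."""
    top = sorted(range(len(x)), key=lambda i: attributions[i], reverse=True)[:k]
    return model(x) - model(ablate(x, set(top), baseline))


# toy linear model (assumed for illustration)
WEIGHTS = [4.0, 0.1, -1.0, 2.0]


def linear_model(x):
    return sum(w * v for w, v in zip(WEIGHTS, x))


x = [1.0, 1.0, 1.0, 1.0]
good_attr = [w * v for w, v in zip(WEIGHTS, x)]   # weight * input
bad_attr = [-a for a in good_attr]                # deliberately wrong ranking

good_drop = top_k_ablation_drop(linear_model, good_attr, x, k=2)
bad_drop = top_k_ablation_drop(linear_model, bad_attr, x, k=2)
```

A faithful attribution should give a larger drop than an unfaithful one (here `good_drop > bad_drop`). Note that ablation can push inputs off-distribution, which is part of the true-to-the-model vs. true-to-the-data question raised above.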

basic failures

- Sanity Checks for Saliency Maps (adebayo et al. 2018)

- Model Parameter Randomization Test - attributions should be different for trained vs random model, but they aren’t for many attribution methods

- Rethinking the Role of Gradient-based Attribution Methods for Model Interpretability (srinivas & fleuret, 2021)

- logits can be arbitrarily shifted without affecting preds / gradient-based explanations

- gradient-based explanations, then, don’t necessarily capture info about $p_\theta(y \mid x)$

- Assessing the (Un)Trustworthiness of Saliency Maps for Localizing Abnormalities in Medical Imaging (arun et al. 2020) - CXR images from SIIM-ACR Pneumothorax Segmentation + RSNA Pneumonia Detection

- metrics: localizers (do they overlap with GT segs/bounding boxes), variation with model weight randomization, repeatable (i.e. same after retraining?), reproducibility (i.e. same after training different model?)

- Interpretable Deep Learning under Fire (zhang et al. 2019)

- Wittgenstein: “if a lion could speak, we could not understand him.”
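The logit-shift observation from srinivas & fleuret above can be checked numerically. The sketch below assumes a tiny linear classifier and a shift of the form $g(x) = v^\top x$ added to every logit; the weights, the shift vector, and the finite-difference gradient are all illustrative choices, not the paper's setup.

```python
import math

W = [[1.0, -2.0], [0.5, 0.3], [-1.0, 1.0]]  # 3 classes, 2 features (assumed)
v = [10.0, -7.0]                            # arbitrary shift direction (assumed)


def softmax(logits):
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logits(x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def shifted_logits(x):
    """Add the same input-dependent scalar g(x) = v.x to every logit."""
    g = sum(vi * xi for vi, xi in zip(v, x))
    return [l + g for l in logits(x)]


def input_gradient(f, x, k, eps=1e-6):
    """Central finite-difference gradient of logit k with respect to x."""
    grad = []
    for i in range(len(x)):
        hi = x[:i] + [x[i] + eps] + x[i + 1:]
        lo = x[:i] + [x[i] - eps] + x[i + 1:]
        grad.append((f(hi)[k] - f(lo)[k]) / (2 * eps))
    return grad


x = [0.3, -0.2]
probs = softmax(logits(x))
probs_shifted = softmax(shifted_logits(x))            # identical predictions
grad = input_gradient(logits, x, k=0)
grad_shifted = input_gradient(shifted_logits, x, k=0) # differs from grad by v
```

The predicted probabilities agree, while the logit gradients differ by exactly `v`, so a logit-gradient saliency map can be changed without changing the classifier's predictions.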

adv. vulnerabilities

- Interpretation of Neural Networks is Fragile (ghorbani et al. 2018)

- minor perturbations to inputs can drastically change DNN interpretations

- How can we fool LIME and SHAP? Adversarial Attacks on Post hoc Explanation Methods (slack, …, singh, lakkaraju, 2020)

- we can build classifiers which use important features (such as race) but explanations will not reflect that

- basically classifier is different on X which is OOD (and used by LIME and SHAP)

- Fooling Neural Network Interpretations via Adversarial Model Manipulation (heo, joo, & moon 2019) - can change model weights so that it keeps predictive accuracy but changes its interpretation

- motivation: could falsely look like a model is “fair” because it places little saliency on sensitive attributes

- output of model can still be checked regardless

- fooled interpretation generalizes to entire validation set

- can force the new saliency to be whatever we like

- passive fooling - highlighting uninformative pixels of the image

- active fooling - highlighting a completely different object, the firetruck

- model does not actually change that much - predictions when manipulating pixels in order of saliency remain similar, very different from random (fig 4)

- Counterfactual Explanations Can Be Manipulated (slack,…,singh, 2021) - minor changes in the training objective can drastically change counterfactual explanations
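The idea noted above for fooling LIME and SHAP (the classifier behaves differently on out-of-distribution perturbations than on real data) can be sketched as a wrapper. This is a toy illustration only: the OOD check below is a hand-written stand-in that assumes feature 2 is always an exact copy of feature 1 in real data, and all function names are hypothetical.

```python
def is_off_manifold(x, tol=1e-9):
    """Stand-in OOD detector: real rows satisfy x[1] == x[2] (assumed)."""
    return abs(x[1] - x[2]) > tol


def biased_model(x):
    """Depends only on the sensitive feature x[0]."""
    return int(x[0] > 0.5)


def innocuous_model(x):
    """Depends only on the non-sensitive feature x[1]."""
    return int(x[1] > 0.5)


def scaffolded_model(x):
    """Biased on real data, innocuous on perturbed (off-manifold) inputs."""
    return innocuous_model(x) if is_off_manifold(x) else biased_model(x)


real_row = [1.0, 0.2, 0.2]        # on-manifold: decided by the sensitive feature
perturbed_row = [1.0, 0.2, 0.9]   # off-manifold: decided by x[1] instead
```

Because perturbation-based explainers mostly query points like `perturbed_row`, the explanation would reflect `innocuous_model` even though real inputs are decided by `biased_model`.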

intrinsic interpretability (i.e. how can we fit a simpler model)

For an implementation of many of these models, see the python imodels package.

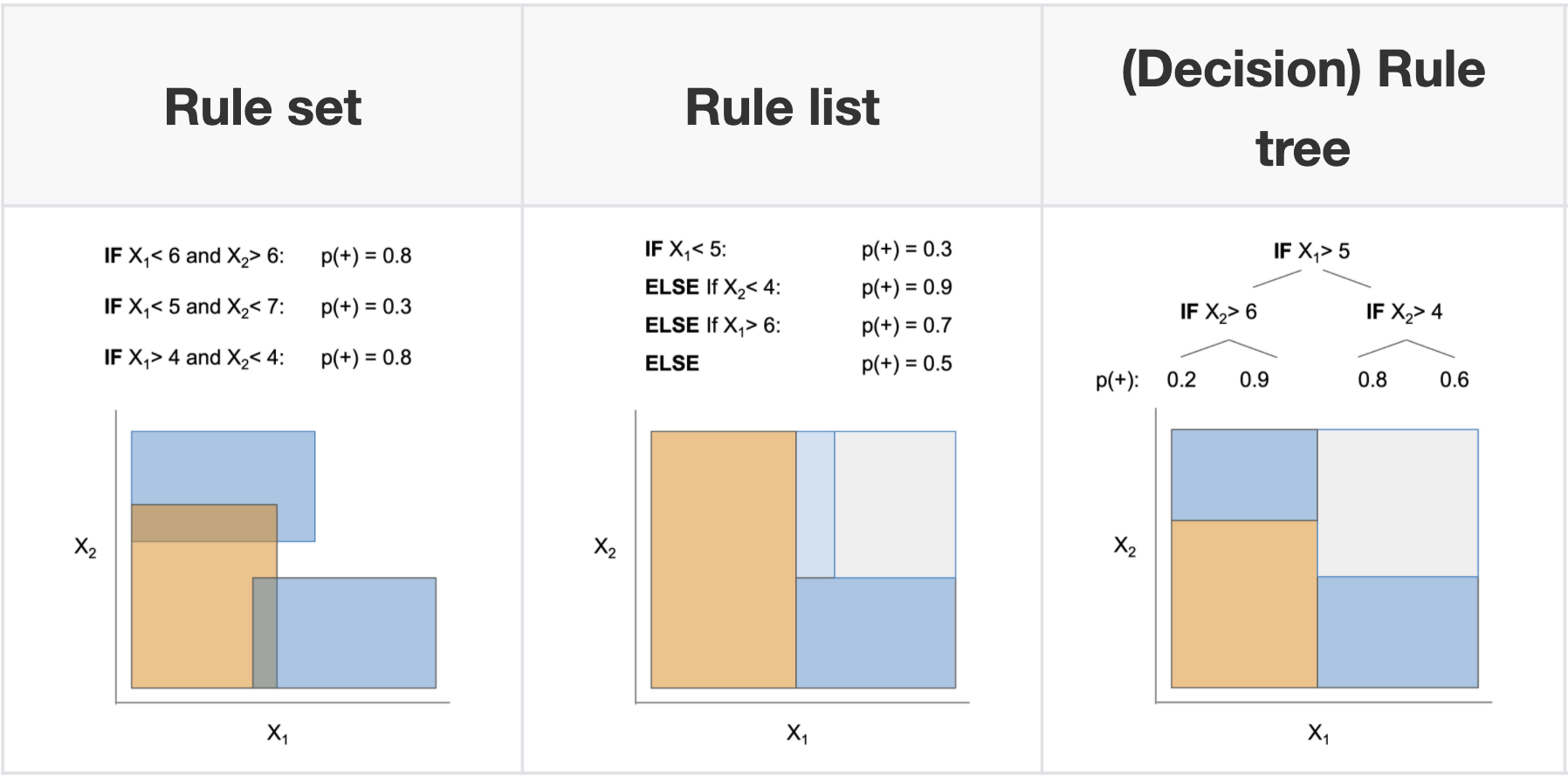

decision rules overview

📌 see also notes on logic

- 2 basic concepts for a rule

- coverage = support

- accuracy = confidence = consistency

- measures for rules: precision, info gain, correlation, m-estimate, Laplace estimate

- these algorithms usually don’t support regression, but you can get regression by cutting the outcome into intervals

- why might these be useful?

- The Magical Mystery Four: How is Working Memory Capacity Limited, and Why? (cowan, 2010) - a central memory store limited to 3 to 5 meaningful items in young adults

- connections

- every decision list is a (one-sided) decision tree

- every decision tree can be expressed as an equivalent decision list (by listing each path to a leaf as a decision rule)

- leaves of a decision tree (or a decision list) form a decision set

- recent work directly optimizes the performance metric (e.g., accuracy) with soft or hard sparsity constraints on the tree size, where sparsity is measured by the number of leaves in the tree using:

- mathematical programming, including mixed integer programming (MIP) / SAT solvers

- stochastic search through the space of trees

- customized dynamic programming algorithms that incorporate branch-and-bound techniques for reducing the size of the search space
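The two basic rule concepts from this overview (coverage = support, accuracy = confidence) are easy to compute directly. A minimal sketch on made-up data; the rule, feature names, and rows are hypothetical.

```python
def rule_stats(rule, X, y, target):
    """Return (coverage, accuracy) of `rule -> target` on a dataset.

    coverage: fraction of rows where the rule's condition fires
    accuracy: among covered rows, fraction whose label equals `target`
    """
    covered = [label for row, label in zip(X, y) if rule(row)]
    coverage = len(covered) / len(X)
    accuracy = sum(label == target for label in covered) / len(covered) if covered else 0.0
    return coverage, accuracy


# hypothetical toy data
X = [
    {"age": 72, "smoker": True},
    {"age": 65, "smoker": True},
    {"age": 80, "smoker": False},
    {"age": 30, "smoker": True},
    {"age": 68, "smoker": True},
]
y = [1, 1, 0, 0, 0]


def older_smoker(row):
    """IF age > 60 AND smoker THEN predict 1."""
    return row["age"] > 60 and row["smoker"]


coverage, accuracy = rule_stats(older_smoker, X, y, target=1)
```

Here the rule fires on 3 of the 5 rows (coverage 0.6), and 2 of those 3 have label 1 (accuracy 2/3).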

rule sets

Rule sets commonly look like a series of independent if-then rules. Unlike trees / lists, these rules can be overlapping and might not cover the whole space. Final predictions can be made via majority vote, using most accurate rule, or averaging predictions. Sometimes also called rule ensembles.

- popular ways to learn rule sets

- SLIPPER (cohen, & singer, 1999) - repeatedly boosting a simple, greedy rule-builder

- Lightweight Rule Induction (weiss & indurkhya, 2000) - specify number + size of rules and classify via majority vote

- Maximum Likelihood Rule Ensembles (Dembczyński et al. 2008) - MLRules - rule is base estimator in ensemble - build by greedily maximizing log-likelihood

- rulefit (friedman & popescu, 2008) - extract rules from many decision trees, then fit sparse linear model on them

- A statistical approach to rule learning (ruckert & kramer, 2006) - unsupervised objective to mine rules with large margin and low variance before fitting linear model

- Generalized Linear Rule Models (wei et al. 2019) - use column generation (CG) to intelligently search space of rules

- re-fit GLM as rules are generated, reweighting + discarding

- with large number of columns, can be intractable even to enumerate rules - CG avoids this by fitting a subset and using it to construct most promising next column

- also propose a non-CG algorithm using only 1st-degree rules

- note: from every pair of complementary singleton rules (e.g., $X_j \leq1$, $X_j > 1$), they remove one member as otherwise the pair together is collinear

- Multivariate Adaptive Regression Splines (MARS) (friedman, 1991) - sequentially learn weighted linear sum of ReLUs (or products of ReLUs)

- do backward deletion procedure at the end

- MARS via LASSO (ki, fang, & guntuboyina, 2021) - MARS but use LASSO

- Hardy-Krause denoising (fang, guntuboyina, & sen, 2020) - builds additive models of piecewise constant functions along with interactions and then does LASSO on them

- more recent global versions of learning rule sets

- interpretable decision set (lakkaraju et al. 2016) - set of if then rules

- short, accurate, and non-overlapping rules that cover the whole feature space and pay attention to small but important classes

- A Bayesian Framework for Learning Rule Sets for Interpretable Classification (wang et al. 2017) - rules are a bunch of clauses OR’d together (e.g. if (X1>0 AND X2<1) OR (X2<1 AND X3>1) OR … then Y=1)

- they call this method “Bayesian Rule Sets”

- Or’s of And’s for Interpretable Classification, with Application to Context-Aware Recommender Systems (wang et al. 2015) - BOA - Bayesian Or’s of And’s

- Vanishing boosted weights: A consistent algorithm to learn interpretable rules (sokolovska et al. 2021) - simple efficient fine-tuning procedure for decision stumps

- when learning sequentially, often useful to prune at each step (Furnkranz, 1997)
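The prediction schemes mentioned at the top of this section (majority vote, or using the most accurate rule) can be sketched for overlapping rules that do not cover the whole space. The rules, their accuracies, and the default class below are made up for illustration.

```python
from collections import Counter

# each rule: (condition, predicted class, accuracy estimated on training data)
RULES = [
    (lambda x: x["a"] > 0, 1, 0.7),
    (lambda x: x["b"] > 0, 1, 0.6),
    (lambda x: x["c"] > 0, 0, 0.9),
]


def predict_majority(rules, x, default):
    """Majority vote over all rules whose condition fires."""
    votes = Counter(pred for cond, pred, _ in rules if cond(x))
    return votes.most_common(1)[0][0] if votes else default


def predict_most_accurate(rules, x, default):
    """Use only the firing rule with the highest accuracy."""
    fired = [(acc, pred) for cond, pred, acc in rules if cond(x)]
    return max(fired, key=lambda t: t[0])[1] if fired else default


x_all = {"a": 1, "b": 1, "c": 1}       # all three rules fire
x_none = {"a": -1, "b": -1, "c": -1}   # no rule fires, fall back to default
```

On `x_all` the two schemes disagree: two rules vote for class 1, but the single most accurate rule predicts class 0. On `x_none` both return the default, which is how uncovered regions are handled in this sketch.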

rule lists

- oneR algorithm - select feature that carries most information about the outcome and then split multiple times on that feature

- sequential covering - keep trying to cover more points sequentially

- pre-mining frequent patterns (want them to apply to a large amount of data and not have too many conditions)

- FP-Growth algorithm (borgelt 2005) is fast

- Apriori + Eclat do the same thing, but with different speeds

- interpretable classifiers using rules and bayesian analysis (letham et al. 2015)

- start by pre-mining frequent patterns rules

- current approach does not allow for negation (e.g. not diabetes) and must split continuous variables into categorical somehow (e.g. quartiles)

- mines things that frequently occur together, but doesn’t look at outcomes in this step - okay (since this is all about finding rules with high support)

- learn rules w/ prior for short rule conditions and short lists

- start w/ random list

- sample new lists by adding/removing/moving a rule

- at the end, return the list that had the highest probability

- scalable bayesian rule lists (yang et al. 2017) - faster algorithm for computing

- doesn’t return entire posterior

- learning certifiably optimal rules lists (angelino et al. 2017) - even faster optimization for categorical feature space

- can get upper / lower bounds for loss = risk + $\lambda$ * listLength

- Expert-augmented machine learning (gennatas et al. 2019)

- make rule lists, then compare the outcomes for each rule with what clinicians think should be outcome for each rule

- look at rules with biggest disagreement and engineer/improve rules or penalize unreliable rules

- Fast and frugal heuristics: The adaptive toolbox (gigerenzer et al. 1999) - makes rule lists that can split on either node of the tree each time
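The oneR algorithm from the start of this section can be sketched for categorical features. One assumption to flag: this version picks the feature by lowest training error (a common formulation), which stands in for "carries most information about the outcome"; the data is made up.

```python
from collections import Counter, defaultdict


def one_r(X, y):
    """Return (feature index, value -> class rule, training errors) for the best feature."""
    best = None
    for j in range(len(X[0])):
        # count labels for each value of feature j
        counts = defaultdict(Counter)
        for row, label in zip(X, y):
            counts[row[j]][label] += 1
        # one branch per value, predicting that value's majority class
        rule = {value: c.most_common(1)[0][0] for value, c in counts.items()}
        errors = sum(rule[row[j]] != label for row, label in zip(X, y))
        if best is None or errors < best[2]:
            best = (j, rule, errors)
    return best


# hypothetical toy data: (outlook, temperature) -> play?
X = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "hot"),
     ("rainy", "mild"), ("overcast", "hot"), ("overcast", "mild")]
y = ["no", "no", "yes", "yes", "yes", "yes"]

feature, rule, errors = one_r(X, y)
```

On this data the outlook feature separates the classes perfectly, so it is selected with zero training errors, while the temperature feature would make two errors.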

trees

Trees suffer from the fact that they have to cover the entire decision space and often we end up with replicated subtrees.

- optimal trees

- motivation

- cost-complexity pruning (breiman et al. 1984 ch 3) - greedily prune while minimizing loss function of loss + $\lambda \cdot (\text{numLeaves})$

- replicated subtree problem (Bagallo & Haussler, 1990) - they propose iterative algorithms to try to overcome it

- Generalized and Scalable Optimal Sparse Decision Trees (lin…rudin, seltzer, 2020)

- optimize for $\min L(X, y) + \lambda \cdot (\text{numLeaves})$

- full decision tree optimization is NP-hard (hyafil & rivest, 1976)

- can optimize many different losses (e.g. accuracy, AUC)

- speedups: use dynamic programming, prune the search-space with bounds

- How Smart Guessing Strategies Can Yield Massive Scalability Improvements for Sparse Decision Tree Optimization (mctavish…rudin, seltzer, 2021)

- hash trees with bit-vectors that represent similar trees using shared subtrees

- tree is a set of leaves

- derive many bounds

- e.g. if know best loss so far, know shouldn’t add too many leaves since each adds $\lambda$ to the total loss

- e.g. similar-support bound - if two features are similar, then bounds for splitting on the first can be used to obtain bounds for the second

- optimal sparse decision trees (hu et al. 2019) - previous paper, slower

- bounds: Upper Bound on Number of Leaves, Leaf Permutation Bound

- optimal classification trees methodology paper (bertsimas & dunn, 2017) - solve optimal tree with expensive, mixed-integer optimization - realistically, usually too slow

- $\begin{array}{cl} \min & \overbrace{R_{xy}(T)}^{\text{misclassification err}}+\alpha|T| \\ \text{s.t.} & N_{x}(l) \geq N_{\min} \quad \forall l \in \text{leaves}(T) \end{array}$

- $|T|$ is the number of branch nodes in tree $T$

- $N_x(l)$ is the number of training points contained in leaf node $l$

- optimal classification trees vs PECARN (bertsimas et al. 2019)

- Learning Optimal Fair Classification Trees (jo et al. 2022)

- Better Short than Greedy: Interpretable Models through Optimal Rule Boosting (boley, …, webb, 2021) - find optimal tree ensemble (only works for very small data)

- connections with boosting

- Fast Interpretable Greedy-Tree Sums (FIGS) (tan et al. 2022) - extend cart to learn concise tree ensembles 🌳 ➡️ 🌱+🌱

- very nice results for generalization + disentanglement

- AdaTree - learn Adaboost stumps then rewrite as a tree (grossmann, 2004) 🌱+🌱 ➡️ 🌳

- note: easy to rewrite boosted stumps as tree (just repeat each stump for each node at a given depth)

- MediBoost - again, learn boosted stumps then rewrite as a tree (valdes…solberg 2016) 🌱+🌱 ➡️ 🌳 but with 2 tweaks:

- shrinkage: use membership function that accelerates convergence to a decision (basically shrinkage during boosting)

- prune the tree in a manner that does not affect the tree’s predictions

- prunes branches that are impossible to reach by tracking the valid domain for every attribute (during training)

- post-prune the tree bottom-up by recursively eliminating the parent nodes of leaves with identical predictions

- AddTree = additive tree - learn single tree, but rather than using only the current node’s data to decide the next split, also allow the remaining data to influence this split, although with a potentially differing weight (luna, …, friedman, solberg, valdes, 2019)

- the weight is chosen as a hyperparameter

- bayesian trees

- Bayesian Treed Models (chipman et al. 2001) - impose priors on tree parameters

- treed models - fit a model (e.g. linear regression) in leaf nodes

- tree structure e.g. depth, splitting criteria

- values in terminal nodes conditioned on tree structure

- residual noise’s standard deviation

- Stochastic gradient boosting (friedman 2002) - boosting where at each iteration a subsample of the training data is used

- BART: Bayesian additive regression trees (chipman et al. 2008) - learns an ensemble of tree models using MCMC on a distr. imbued with a prior (not interpretable)

- pre-specify number of trees in ensemble

- MCMC step: add split, remove split, switch split

- cycles through the trees one at a time

- history

- automatic interaction detection (AID) regression trees (Morgan & Sonquist, 1963)

- THeta Automatic Interaction Detection (THAID) classification trees (Messenger & Mandell, 1972)

- Chi-squared Automatic Interaction Detector (CHAID) (Kass, 1980)

- CART: Classification And Regression Trees (Breiman et al. 1984) - splits on GINI

- ID3 (Quinlan, 1986)

- C4.5 (Quinlan, 1993) - splits on binary entropy instead of GINI

- open problems

- ensemble methods

- improvements in splitting criteria, missing variables

- longitudinal data, survival curves

- misc

- counterfactuals

- regularization

- Hierarchical Shrinkage: improving accuracy and interpretability of tree-based methods (agarwal et al. 2021) - post-hoc shrinkage improves trees

- Hierarchical priors for Bayesian CART shrinkage (chipman & mcculloch, 2000)

- Connecting Interpretability and Robustness in Decision Trees through Separation (moshkovitz et al. 2021)

- Efficient Training of Robust Decision Trees Against Adversarial Examples

- Robust Decision Trees Against Adversarial Examples (chen, …, hsieh, 2019)

- optimize tree performance under worst-case input-feature perturbation

- On the price of explainability for some clustering problems (laber et al. 2021) - trees for clustering

- extremely randomized trees (geurts et al. 2006) - randomness goes further than Random Forest - randomly select not only the feature but also the split thresholds (and select the best out of some random set)
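The sparsity-penalized objective that recurs in this section, loss + $\lambda \cdot (\text{numLeaves})$, can be evaluated on hand-built trees to see how $\lambda$ trades accuracy against size. This is a sketch under assumptions: trees are encoded as nested tuples, the loss is misclassification rate, and the three candidate trees and the data are made up; it only scores fixed candidates and does not search over trees the way the optimal-tree methods above do.

```python
# tree encoding (assumed): ("leaf", prediction) or ("split", feature, threshold, left, right)
LEAF = ("leaf", 0)
STUMP = ("split", 0, 3.5, ("leaf", 0), ("leaf", 1))
DEEP = ("split", 0, 3.5, ("leaf", 0),
        ("split", 0, 6.5, ("leaf", 1), ("leaf", 0)))
CANDIDATES = {"leaf": LEAF, "stump": STUMP, "deep": DEEP}


def predict(tree, x):
    while tree[0] == "split":
        _, feature, threshold, left, right = tree
        tree = left if x[feature] <= threshold else right
    return tree[1]


def num_leaves(tree):
    if tree[0] == "leaf":
        return 1
    return num_leaves(tree[3]) + num_leaves(tree[4])


def objective(tree, X, y, lam):
    """Misclassification rate plus lam * number of leaves."""
    error = sum(predict(tree, x) != label for x, label in zip(X, y)) / len(X)
    return error + lam * num_leaves(tree)


def best_tree(candidates, X, y, lam):
    return min(candidates, key=lambda name: objective(candidates[name], X, y, lam))


X = [[0], [1], [2], [3], [4], [5], [6], [7]]
y = [0, 0, 0, 0, 1, 1, 1, 0]
```

With a small penalty (`lam=0.01`) the three-leaf tree that fits the data exactly wins; at `lam=0.2` the stump wins; at `lam=0.3` a single leaf is preferred.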

linear (+algebraic) models

supersparse models

- four main types of approaches to building scoring systems

- exact solutions using optimization techniques (often use MIP)

- approximation algorithms using linear programming (use L1 penalty instead of L0)

- can also try sampling

- more sophisticated rounding techniques - e.g. random, constrain sum, round each coef sequentially

- computer-aided exploration techniques

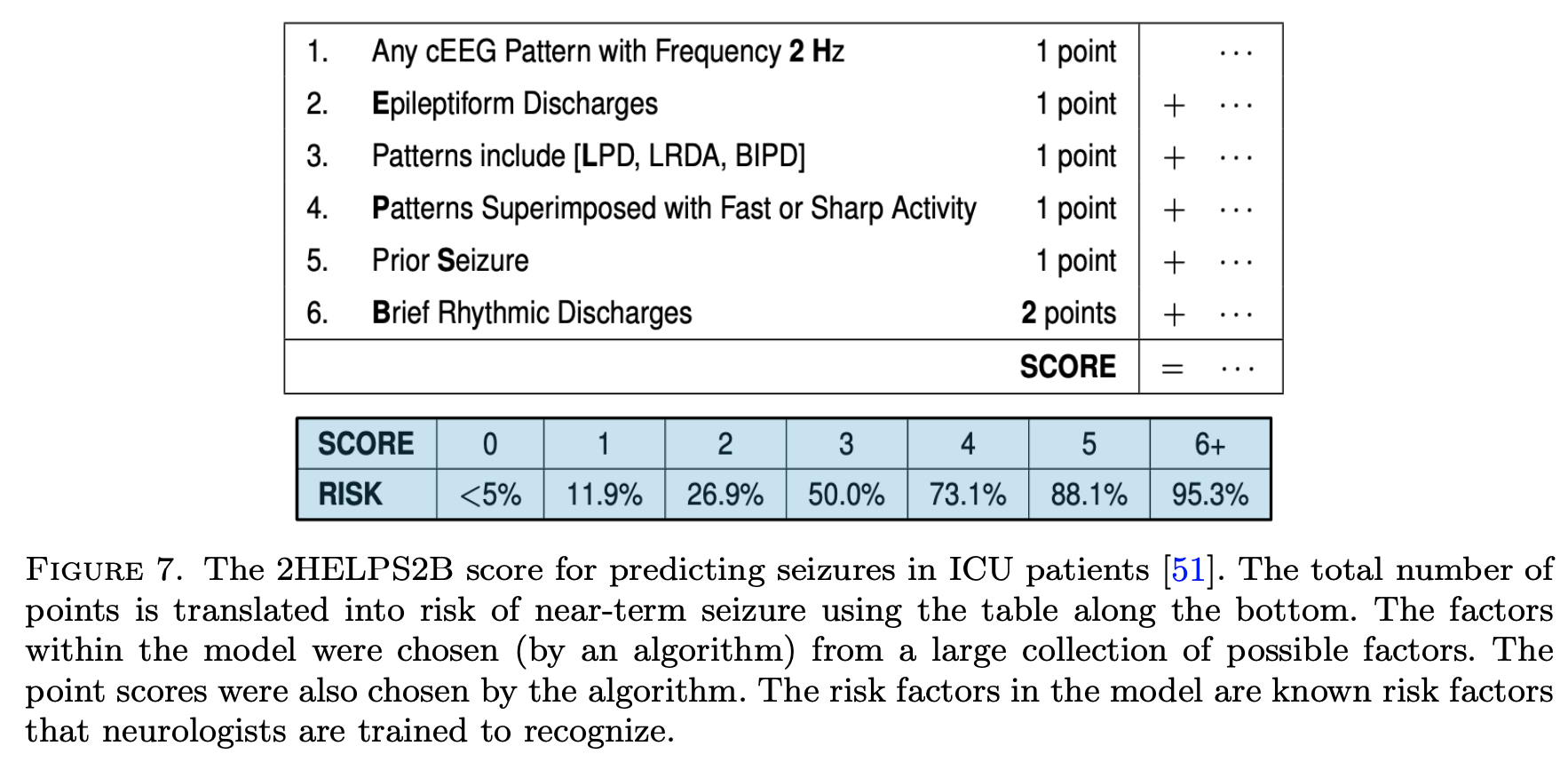

- Supersparse linear integer models for optimized medical scoring systems (ustun & rudin 2016)

- 2helps2b paper

- note: scoring systems map points to a risk probability

- An Interpretable Model with Globally Consistent Explanations for Credit Risk (chen et al. 2018) - a 2-layer linear additive model

- Fast Sparse Classification for Generalized Linear and Additive Models (liu, …, seltzer, rudin, 2022)
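As noted above, a scoring system maps integer points to a risk probability. The sketch below assumes a logistic link and uses naive rounding of real-valued coefficients, which is the simple baseline that the more sophisticated rounding and exact-optimization approaches in this section aim to improve on; the coefficients and intercept are made up.

```python
import math


def round_to_points(coefs, scale=1.0):
    """Naive rounding of real-valued coefficients to integer points."""
    return [int(round(c * scale)) for c in coefs]


def risk(points, intercept, x):
    """Total the points for the present features and map the score to a probability."""
    score = intercept + sum(p * xi for p, xi in zip(points, x))
    return 1.0 / (1.0 + math.exp(-score))


coefs = [1.2, -0.4, 2.6]          # hypothetical fitted logistic coefficients
points = round_to_points(coefs)   # one integer per binary feature
low = risk(points, intercept=-2, x=[1, 1, 0])
high = risk(points, intercept=-2, x=[1, 1, 1])
```

Naive rounding drops the second feature entirely (its coefficient rounds to 0), which illustrates how rounding alone can change the model and why it is not guaranteed to preserve accuracy.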

gams (generalized additive models)

- gam takes form $g(\mu) = b + f_0(x_0) + f_1(x_1) + f_2(x_2) + \dots$

- usually assume some basis for the $f$, like splines or polynomials (and we select how many either manually or with some complexity penalty)

- backfitting - traditional way to fit - each $f_i$ is fitted sequentially to the residuals of the previously fitted $f_0,\dots,f_{i-1}$ (hastie & tibshirani, 1989)

- boosting - fit all $f$ simultaneously, e.g. one tree for each $f_i$ on each iteration

- interpretability depends on (1) transparency of $f_i$ and (2) number of terms

- can also add in interaction terms (e.g. $f_i(x_1, x_2)$), but need a way to rank which interactions to add (see notes on interactions)

- NODE-GAM: Neural Generalized Additive Model for Interpretable Deep Learning (chang, caruana, & goldenberg, 2021)

- includes interaction terms (all features are used initially and backprop decides which are kept) - they call this $GA^2M$

- Creating Powerful and Interpretable Models with Regression Networks (2021) - generalizes neural GAM to include interaction terms

- train first-order functions

- fix them and predict residuals with next order (and repeat for as many orders as desired)

- Neural Additive Models: Interpretable Machine Learning with Neural Nets - GAM where we learn $f$ with a neural net

- InterpretML: A Unified Framework for Machine Learning Interpretability (nori…caruana 2019) - software package mostly focused on explainable boosting machine (EBM)

- EBM - GAM which uses boosted decision trees as $f_i$

- Accuracy, Interpretability, and Differential Privacy via Explainable Boosting (nori, caruana et al. 2021)

- added differential privacy to explainable boosting

- misc improvements

- GAM Changer: Editing Generalized Additive Models with Interactive Visualization (wang…caruana et al. 2021) - really nice gui

- Axiomatic Interpretability for Multiclass Additive Models (zhang, tan, … caruana, 2019)

- extend GAM to multiclass and improve visualizations in that setting

- Sparse Partially Linear Additive Models (lou, bien, caruana & gehrke, 2015) - some terms are linear and some use $f_i(x_i)$
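The backfitting procedure described at the top of this section can be sketched with a very simple smoother. Assumptions to flag: each $f_i$ is a piecewise-constant function over equal-width bins (instead of splines), the link is the identity, and the loop is the usual cyclic variant, where each $f_i$ is refit to the residuals of all other components (on the first pass this reduces to fitting the residuals of the previously fitted ones). All names and the toy data are hypothetical.

```python
import math
import random


def bin_smoother(x, r, n_bins=10):
    """Fit a piecewise-constant function of x to targets r; return it as a callable."""
    lo, hi = min(x), max(x)
    width = (hi - lo) / n_bins or 1.0

    def bin_of(v):
        return min(max(int((v - lo) / width), 0), n_bins - 1)

    sums, counts = [0.0] * n_bins, [0] * n_bins
    for v, t in zip(x, r):
        sums[bin_of(v)] += t
        counts[bin_of(v)] += 1
    means = [s / c if c else 0.0 for s, c in zip(sums, counts)]
    return lambda v: means[bin_of(v)]


def backfit(X, y, n_iter=10, n_bins=10):
    """Return intercept b and per-feature shape functions f_j."""
    n, d = len(X), len(X[0])
    b = sum(y) / n
    cols = [[row[j] for row in X] for j in range(d)]
    fitted = [[0.0] * n for _ in range(d)]   # fitted[j][i] = f_j(x_ij)
    funcs = [None] * d
    for _ in range(n_iter):
        for j in range(d):
            # partial residual: remove intercept and every other component
            resid = [y[i] - b - sum(fitted[k][i] for k in range(d) if k != j)
                     for i in range(n)]
            f = bin_smoother(cols[j], resid, n_bins)
            vals = [f(v) for v in cols[j]]
            mean = sum(vals) / n
            fitted[j] = [v - mean for v in vals]   # center for identifiability
            funcs[j] = lambda v, f=f, mean=mean: f(v) - mean
    return b, funcs


def predict(b, funcs, x):
    return b + sum(f(v) for f, v in zip(funcs, x))


def make_toy_data(n=400, seed=0):
    """Additive ground truth: y = 2 + x0^2 + sin(3 * x1)."""
    rng = random.Random(seed)
    X = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(n)]
    y = [2 + x0 ** 2 + math.sin(3 * x1) for x0, x1 in X]
    return X, y
```

Each returned `funcs[j]` can be plotted on its own, which is where the interpretability of a GAM comes from: the contribution of feature $j$ does not depend on the other features.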

symbolic regression

Symbolic regression learns a symbolic expression (e.g. a mathematical formula) for a function from data, using priors on what kinds of symbols (e.g. sin, exp) are more “difficult”.

- Demystifying Black-box Models with Symbolic Metamodels

- GAM parameterized with Meijer G-functions (rather than pre-specifying some forms, as is done with symbolic regression)

- Logic Regression (ruczinski, kooperberg & leblanc, 2012) - given binary input variables, automatically construct interaction terms and linear model (fit using simulated annealing)

- Building and Evaluating Interpretable Models using Symbolic Regression and Generalized Additive Models

- gams - assume model form is additive combination of some funcs, then solve via GD

- however, if we don’t know the form of the model we must generate it

- Bridging the Gap: Providing Post-Hoc Symbolic Explanations for Sequential Decision-Making Problems with Black Box Simulators

- Model Learning with Personalized Interpretability Estimation (ML-PIE) - use human feedback in the loop to decide which symbolic functions are most interpretable
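The basic symbolic-regression idea from the top of this section (search over formulas, with a prior that treats some symbols as more "difficult") can be sketched as a brute-force search. Everything here is an illustrative assumption: the four candidate forms, their complexity scores, the single fitted scale $a$ in $y \approx a \cdot f(x)$, and the penalty weight. Real systems search a far larger expression space.

```python
import math

# candidate form -> (function, assumed complexity / "difficulty" score)
CANDIDATES = {
    "x": (lambda x: x, 1),
    "x**2": (lambda x: x * x, 2),
    "sin(x)": (math.sin, 3),
    "exp(x)": (math.exp, 3),
}


def fit_symbolic(xs, ys, penalty=0.01):
    """Pick the form f minimizing MSE of y ~ a * f(x) plus penalty * complexity."""
    best = None
    for name, (f, complexity) in CANDIDATES.items():
        feats = [f(x) for x in xs]
        # closed-form least-squares scale for a single basis function
        a = sum(p * y for p, y in zip(feats, ys)) / sum(p * p for p in feats)
        mse = sum((a * p - y) ** 2 for p, y in zip(feats, ys)) / len(xs)
        score = mse + penalty * complexity
        if best is None or score < best[0]:
            best = (score, name, a)
    return best[1], best[2]


xs = [i / 10 for i in range(-20, 21)]
form_sin, scale_sin = fit_symbolic(xs, [2.5 * math.sin(x) for x in xs])
form_lin, scale_lin = fit_symbolic(xs, [3.0 * x for x in xs])
```

The penalty only matters when several forms fit about equally well, in which case the "simpler" symbol is preferred.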

example-based = case-based (e.g. prototypes, nearest neighbor)

- ProtoPNet: This looks like that (2nd paper) (chen, …, rudin, 2018) - learn convolutional prototypes that are smaller than the original input size

- use L2 distance in repr space to measure distance between patches and prototypes

- loss function

- require the filters to be identical to the latent representation of some training image patch

- cluster image patches of a particular class around the prototypes of the same class, while separating image patches of different classes

- maxpool class prototypes so spatial location doesn’t matter

- also get heatmap of where prototype was activated (only max really matters)

- train in 3 steps

- train everything: classification + clustering around intraclass prototypes + separation between interclass prototypes (last layer fixed to 1s / -0.5s)

- project prototypes to data patches

- learn last (linear) layer

- ProtoNets: original prototypes paper (li, …, rudin, 2017)

- uses encoder/decoder setup

- encourage every prototype to be similar to at least one encoded input

- results: learned prototypes in fact look like digits

- correct class prototypes go to correct classes

- loss: classification + reconstruction + distance to a training point

- This Looks Like That, Because … Explaining Prototypes for Interpretable Image Recognition (nauta et al. 2020)

- add textual quantitative information about visual characteristics deemed important by the classification model e.g. colour hue, shape, texture, contrast and saturation

- Neural Prototype Trees for Interpretable Fine-Grained Image Recognition (nauta et al. 2021) - build decision trees on top of prototypes

- performance is slightly poor until they use ensembles

- XProtoNet: Diagnosis in Chest Radiography With Global and Local Explanations (kim et al. 2021)

- alter ProtoPNet to use dynamically sized patches for prototype matching rather than fixed-size patches

- TesNet: Interpretable Image Recognition by Constructing Transparent Embedding Space (wang et al. 2021) - alter ProtoPNet to get “orthogonal” basis concepts

- ProtoPShare: Prototype Sharing for Interpretable Image Classification and Similarity Discovery (Rymarczyk et al. 2020) - share some prototypes between classes with data-dependent merge pruning

- merge “similar” prototypes, where similarity is measured as dist of all training patches in repr. space

- ProtoMIL: Multiple Instance Learning with Prototypical Parts for Fine-Grained Interpretability (Rymarczyk et al. 2021)

- Interpretable Image Classification with Differentiable Prototypes Assignment (rymarczyk et al. 2021)

- These do not Look Like Those: An Interpretable Deep Learning Model for Image Recognition (singh & yow, 2021) - all weights for prototypes are either 1 or -1

- Towards Explainable Deep Neural Networks (xDNN) (angelov & soares 2019) - more complex version of using prototypes

- Self-Interpretable Model with Transformation Equivariant Interpretation (wang & wang, 2021)

- generate data-dependent prototypes for each class and formulate the prediction as the inner product between each prototype and the extracted features

- interpretation is hadamard product of prototype and extracted features (prediction is sum of this product)

- interpretations can be easily visualized by upsampling from the prototype space to the input data space

- regularization

- reconstruction regularizer - regularizes the interpretations to be meaningful and comprehensible

- for each image, enforce each prototype to be similar to its corresponding class’s latent repr.

- transformation regularizer - constrains the interpretations to be transformation equivariant

- self-consistency score quantifies the robustness of interpretation by measuring the consistency of interpretations to geometric transformations

- Case-Based Reasoning for Assisting Domain Experts in Processing Fraud Alerts of Black-Box Machine Learning Models

- ProtoAttend: Attention-Based Prototypical Learning (arik & pfister, 2020) - unlike ProtoPNet, each prediction is made as a weighted combination of similar input samples (like nearest-neighbor)

- Explaining Latent Representations with a Corpus of Examples (crabbe, …, van der schaar 2021) - for an individual prediction, answers two questions:

- Which corpus examples explain the prediction issued for a given test example?

- What features of these corpus examples are relevant for the model to relate them to the test example?
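The patch-to-prototype matching described for ProtoPNet earlier in this section (L2 distance in representation space, then pooling over spatial locations so location doesn't matter) can be sketched without a deep learning framework. Assumptions: the feature map is a small grid of latent vectors written by hand, and the similarity $1/(1+d)$ is just one monotone-decreasing choice made here for illustration, not necessarily the one used in the paper.

```python
def squared_l2(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def prototype_activation(feature_map, prototype):
    """Return (similarity, location) of the best-matching patch.

    feature_map: H x W grid (list of rows) of latent vectors.
    Taking the minimum distance over locations is equivalent to
    max-pooling the similarity map.
    """
    best_dist, best_loc = None, None
    for i, row in enumerate(feature_map):
        for j, patch in enumerate(row):
            d = squared_l2(patch, prototype)
            if best_dist is None or d < best_dist:
                best_dist, best_loc = d, (i, j)
    similarity = 1.0 / (1.0 + best_dist)   # assumed similarity function
    return similarity, best_loc


# hypothetical 2 x 2 feature map with 2-d latent vectors
feature_map = [
    [[0.0, 0.0], [1.0, 0.0]],
    [[2.0, 1.0], [0.5, 0.5]],
]
prototype = [2.0, 1.0]
similarity, location = prototype_activation(feature_map, prototype)
```

The returned location is what gets upsampled into the "this looks like that" heatmap; only the maximum activation feeds the final linear layer.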

interpretable neural nets

- concepts

- Concept Bottleneck Models (koh et al. 2020) - predict concepts before making final prediction

- Concept Whitening for Interpretable Image Recognition (chen et al. 2020) - force network to separate “concepts” (like in TCAV) along different axes

- Interpretability Beyond Classification Output: Semantic Bottleneck Networks - add an interpretable intermediate bottleneck representation

- How to represent part-whole hierarchies in a neural network (hinton, 2021)

- The idea is simply to use islands of identical vectors to represent the nodes in the parse tree (parse tree would be things like wheel-> cabin -> car)

- each patch / pixel gets representations at different levels (e.g. texture, part of wheel, part of cabin, etc.)

- each repr. is a vector - vector for high-level stuff (e.g. car) will agree for different pixels but low level (e.g. wheel) will differ

- during training, each layer at each location gets information from nearby levels

- hinton assumes weights are shared between locations (maybe don’t need to be)

- also attention mechanism across other locations in same layer

- each location also takes in its positional location (x, y)

- could have the lowest-level repr start w/ a convnet

- iCaps: An Interpretable Classifier via Disentangled Capsule Networks (jung et al. 2020)

- the class capsule also includes classification-irrelevant information

- uses a novel class-supervised disentanglement algorithm

- entities represented by the class capsule overlap

- adds additional regularizer

- localization

- WILDCAT: Weakly Supervised Learning of Deep ConvNets for Image Classification, Pointwise Localization and Segmentation (durand et al. 2017) - constrains architecture

- after extracting conv features, replace linear layers with special pooling layers, which helps with spatial localization

- each class gets a pooling map

- prediction for a class is based on top-k spatial regions for a class

- finally, can combine the predictions for each class

- Approximating CNNs with Bag-of-local-Features models works surprisingly well on ImageNet

- CNN is restricted to look at very local features only and still does well (and produces an inbuilt saliency measure)

- learn shapes not texture

- code

- Symbolic Semantic Segmentation and Interpretation of COVID-19 Lung Infections in Chest CT volumes based on Emergent Languages (chowdhury et al. 2020) - combine some segmentation with the classifier

- regularization / constraints

- Sparse Epistatic Regularization of Deep Neural Networks for Inferring Fitness Functions (aghazadeh et al. 2020) - directly regularize interactions / high-order freqs in DNNs

- MonoNet: Towards Interpretable Models by Learning Monotonic Features - enforce output to be a monotonic function of individual features

- Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations (raissi et al. 2019) - PINN - solve PDEs by constraining neural net to predict specific parameters / derivatives

- Improved Deep Fuzzy Clustering for Accurate and Interpretable Classifiers - extract features with a DNN then do fuzzy clustering on this

- Towards Robust Interpretability with Self-Explaining Neural Networks (alvarez-melis & jaakkola 2018) - building architectures that explain their predictions

- Two Instances of Interpretable Neural Network for Universal Approximations (tjoa & guan, 2021) - each neuron responds to a training point

connecting dnns and rules

- interpretable

- Distilling a Neural Network Into a Soft Decision Tree (frosst & hinton 2017) - distills DNN into DNN-like tree in which a sigmoid neuron at each inner node decides which path to follow

- training on distilled DNN predictions outperforms training on original labels

- to make the decision closer to a hard cut, can multiply by a large scalar before applying sigmoid

- parameters updated with backprop

- regularization encourages each inner node to send inputs down both paths equally often
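
The sigmoid routing described above can be sketched for a single inner node. This is a minimal illustration, not the paper's implementation: the function name `soft_tree_predict`, the leaf distributions, and the scalar `beta` (the "large scalar" multiplied in before the sigmoid) are all assumed names and toy values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def soft_tree_predict(x, w, b, left_leaf, right_leaf, beta=1.0):
    """Depth-1 soft tree: go right with probability sigmoid(beta * (w.x + b))."""
    p_right = sigmoid(beta * (sum(wi * xi for wi, xi in zip(w, x)) + b))
    # The output mixes the two leaf distributions by the routing probability.
    return [(1 - p_right) * l + p_right * r for l, r in zip(left_leaf, right_leaf)]

x = [0.2, -0.1]                      # toy input (hypothetical)
w, b = [1.0, 1.0], 0.0               # toy split parameters (hypothetical)
left_leaf, right_leaf = [0.9, 0.1], [0.2, 0.8]

soft = soft_tree_predict(x, w, b, left_leaf, right_leaf, beta=1.0)
hard = soft_tree_predict(x, w, b, left_leaf, right_leaf, beta=100.0)
```

With `beta=1` the prediction is a blend of both leaves; with `beta=100` it is almost exactly the right leaf, which is the "closer to a hard cut" behaviour.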

- Learning Binary Decision Trees by Argmin Differentiation (zantedeschi et al. 2021)

- argmin differentiation - solving an optimization problem as a differentiable module within a parent problem tackled with gradient-based optimization methods

- relax hard splits into soft ones and learn via gradient descent

- [Optimizing for Interpretability in Deep Neural Networks with Tree Regularization](https://www.jair.org/index.php/jair/article/view/12558) (wu…doshi-velez, 2021, Journal of Artificial Intelligence Research) - regularize DNN prediction function towards tree (potentially only for some region)

- Adaptive Neural Trees (tanno et al. 2019) - adaptive neural tree mechanism with trainable nodes, edges, and leaves

- loosely interpretable

- Attention Convolutional Binary Neural Tree for Fine-Grained Visual Categorization (ji et al. 2020)

- Oblique Decision Trees from Derivatives of ReLU Networks (lee & jaakkola, 2020)

- locally constant networks (which are derivatives of relu networks) are equivalent to trees

- they perform well and can use DNN tools e.g. Dropconnect on them

- note: deriv wrt to input can be high-dim

- they define locally constant network LCN scalar prediction as derivative wrt every parameter transposed with the activations of every corresponding neuron

- approximately locally constant network ALCN: replace ReLU $\max(0, x)$ with softplus $\log(1+\exp(x))$

- ensemble locally constant net ELCN - ensemble of LCN or ALCN (they train via boosting)

- TAO: Alternating optimization of decision trees, with application to learning sparse oblique trees (carreira-perpinan, tavallali, 2018)

- Minimize loss function with sparsity of features at each node + predictive performance

- Algorithm: update each node one at a time while keeping all others fixed (finds a local optimum of loss)

- Fast for 2 reasons

- separability - nodes which aren’t on same path from root-to-leaf can be optimized separately

- reduced problem - for any given node, we only solve a binary classification where the labels are the predictions that a point would be given if it were sent left/right

- in this work, solve binary classification by approximating it with sparse logistic regression

- TAO trees with boosting performs well + works for regression (Zharmagambetov and Carreira-Perpinan, 2020)

- TAO trees with bagging performs well (Carreira-Perpiñán & Zharmagambetov, 2020)

- Learning a Tree of Neural Nets (Zharmagambetov and Carreira-Perpinan, 2020) - use neural net rather than binary classification at each node

- Also use TAO trained on neural net features to speed up / improve the network

- incorporating prior knowledge

- Controlling Neural Networks with Rule Representations (seo, …, pfister, 21)

- DEEPCTRL - encodes rules into DNN

- one encoder for rules, one for data

- both are concatenated with stochastic parameter $\alpha$ (which also weights the loss)

- at test-time, can select $\alpha$ to vary the contribution of the rule part (e.g. if rule doesn’t apply to a certain point)

- training

- normalize losses initially to ensure they are on the same scale

- some rules can be made differentiable in a straightforward way: $r(x, \hat y) \leq \tau \to \max (r(x, \hat y ) - \tau, 0)$, but can’t do this for everything e.g. decision tree rules

- rule-based loss is defined by looking at predictions for perturbations of the input

- evaluation

- verification ratio - fraction of samples that satisfy the rule

- see also Lagrangian Duality for Constrained Deep Learning (fioretto et al. 2020)
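
The rule relaxation $\max(r(x, \hat y) - \tau, 0)$ and the verification ratio above are simple enough to write down directly. The sketch below is illustrative only: the function names are hypothetical, and the convex combination in `combined_loss` is an assumed form for how $\alpha$ weights the two losses.

```python
def rule_violation(r_value, tau):
    """Relaxation of the constraint r(x, y_hat) <= tau: zero when satisfied."""
    return max(r_value - tau, 0.0)

def combined_loss(task_loss, rule_loss, alpha):
    """Assumed blend: alpha=0 is purely data-driven, alpha=1 purely rule-driven."""
    return alpha * rule_loss + (1.0 - alpha) * task_loss

def verification_ratio(r_values, tau):
    """Fraction of samples whose rule value satisfies r <= tau."""
    return sum(r <= tau for r in r_values) / len(r_values)

r_values = [0.1, 0.6, 0.4, 0.9]      # toy rule values r(x, y_hat), hypothetical
tau = 0.5
penalties = [rule_violation(r, tau) for r in r_values]
ratio = verification_ratio(r_values, tau)
```

Only the two samples with `r > tau` receive a non-zero penalty, so the gradient of the rule loss pushes only on violating points.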

- RRL: A Scalable Classifier for Interpretable Rule-Based Representation Learning (wang et al. 2020)

- Rule-based Representation Learner (RRL) - automatically learns interpretable non-fuzzy rules for data representation

- project RRL it to a continuous space and propose a novel training method, called Gradient Grafting, that can directly optimize the discrete model using gradient descent

- Harnessing Deep Neural Networks with Logic Rules (hu, …, xing, 2016) - iterative distillation method that transfers the structured information of logic rules into the weights of neural networks

- just trying to improve performance

- Neural Random Forests (biau et al. 2018) - convert RF to DNN

- first layer learns a node for each split

- second layer learns a node for each leaf (by only connecting to nodes on leaves in the path)

- finally map each leaf to a value

- relax + retrain

- Gradient Boosted Decision Tree Neural Network - build DNN based on decision tree ensemble - basically the same but with gradient-boosted trees

- these papers are basically learning trees via backprop, where splits are “smoothed” during training

- Deep Neural Decision Forests (2015)

- dnn learns small intermediate representation, which outputs all possible splits in a tree

- these splits are forced into a tree-structure and optimized via SGD

- neurons use sigmoid function

- Neural Decision Trees - treat each neural net like a node in a tree

- Differentiable Pattern Set Mining (fischer & vreeken, 2021)

- use neural autoencoder with binary activations + binarizing weights

- optimizing a data-sparsity aware reconstruction loss, continuous versions of the weights are learned in small, noisy steps

constrained models

- different constraints in tensorflow lattice

- e.g. monotonicity, convexity, unimodality (unique peak), pairwise trust (model has higher slope for one feature when another feature is in particular value range)

- e.g. regularizers = laplacian (flatter), hessian (linear), wrinkle (smoother), torsion (independence between feature contributions)

- lattice regression (garcia & gupta, 2009) - learn keypoints of look-up table and at inference time interpolate the table

- to learn, view as kernel method and then learn linear function in the kernel space

- Monotonic Calibrated Interpolated Look-Up Tables (gupta et al. 2016)

- speed up $D$-dimensional interpolation to $O(D \log D)$

- follow-up work: Deep Lattice Networks and Partial Monotonic Functions (you,…,gupta, 2017) - use many layers

- monotonicity constraints in histogram-based gradient boosting (see sklearn)
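
To make the look-up-table idea behind lattice regression concrete, here is a minimal sketch of inference on a 2x2 lattice over the unit square. The function name `lattice_2d` and the table values are hypothetical; real lattice models learn the vertex values and use larger grids plus per-feature calibrators.

```python
def lattice_2d(x1, x2, table):
    """Bilinear interpolation of a 2x2 look-up table on [0, 1]^2.

    table[i][j] is the (learned) value at the vertex x1=i, x2=j.
    """
    return ((1 - x1) * (1 - x2) * table[0][0]
            + (1 - x1) * x2 * table[0][1]
            + x1 * (1 - x2) * table[1][0]
            + x1 * x2 * table[1][1])

# Toy vertex values with table[1][j] >= table[0][j], so the
# interpolated function is non-decreasing in x1 everywhere.
table = [[0.0, 1.0],
         [2.0, 4.0]]

center = lattice_2d(0.5, 0.5, table)
low, high = lattice_2d(0.2, 0.7, table), lattice_2d(0.6, 0.7, table)
```

Because the interpolation is linear in each coordinate, a monotonicity constraint reduces to simple inequalities between adjacent vertex values, which is what makes this model family easy to constrain.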

misc models

- learning AND-OR Templates for Object Recognition and Detection (zhu_13)

- ross et al. - constrain model during training

- scat transform idea (mallat_16 rvw, oyallan_17)

- force interpretable description by piping through something interpretable (ex. tenenbaum scene de-rendering)

- learn concepts through probabilistic program induction

- force biophysically plausible learning rules

- The Convolutional Tsetlin Machine - uses easy-to-interpret conjunctive clauses

- Beyond Sparsity: Tree Regularization of Deep Models for Interpretability

- regularize so that deep model can be closely modeled by tree w/ few nodes

- Tensor networks - like DNN that only takes boolean inputs and deals with interactions explicitly

- widely used in physics

- Coefficient tree regression: fast, accurate and interpretable predictive modeling (surer, apley, & malthouse, 2021) - iteratively group linear terms with similar coefficients into a bigger term

bayesian models

programs

- program synthesis - automatically find a program in an underlying programming language that satisfies some user intent

- ex. program induction - given a dataset consisting of input/output pairs, generate a (simple?) program that produces the same pairs

- Programs as Black-Box Explanations (singh et al. 2016)

- probabilistic programming - specify graphical models via a programming language

posthoc interpretability (i.e. how can we interpret a fitted model)

Note that in this section we also include importances that work directly on the data (e.g. we do not first fit a model, rather we do nonparametric calculations of importance)

model-agnostic

- local surrogate (LIME) - fit a simple model locally around one point and interpret that

- select data perturbations and get new predictions

- for tabular data, this is just varying the values around the prediction

- for images, this is turning superpixels on/off

- superpixels determined in unsupervised way

- weight the new samples based on their proximity

- train a kernel-weighted, interpretable model on these points

- LEMNA - like lime but uses lasso + small changes
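
The LIME recipe above (perturb, weight by proximity, fit a weighted interpretable model) can be sketched for tabular data as follows. This is a simplified illustration, not the `lime` package: Gaussian perturbations, the exponential kernel, and plain weighted least squares (instead of a sparse linear model) are all assumptions, and `lime_tabular` is a hypothetical name.

```python
import numpy as np

def lime_tabular(predict_fn, x, n_samples=500, scale=0.5, kernel_width=1.0, seed=0):
    rng = np.random.default_rng(seed)
    # 1. Perturb around x and query the black box.
    Z = x + scale * rng.standard_normal((n_samples, x.size))
    y = predict_fn(Z)
    # 2. Weight each sample by its proximity to x.
    w = np.exp(-np.sum((Z - x) ** 2, axis=1) / kernel_width ** 2)
    # 3. Fit a weighted linear surrogate (intercept + slopes).
    A = np.hstack([np.ones((n_samples, 1)), Z - x])
    sw = np.sqrt(w)[:, None]
    coef, *_ = np.linalg.lstsq(A * sw, y * sw[:, 0], rcond=None)
    return coef[1:]  # local slopes are the explanation

# Toy black box (hypothetical): its gradient at (1, 0) is (2, 3).
black_box = lambda Z: Z[:, 0] ** 2 + 3.0 * Z[:, 1]
slopes = lime_tabular(black_box, np.array([1.0, 0.0]))
```

For this smooth toy function the surrogate slopes should land close to the true local gradient; the kernel width controls how local the explanation is.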

- anchors (ribeiro et al. 2018) - find biggest square region of input space that contains input and preserves same output (with high precision)

- does this search via iterative rules

- What made you do this? Understanding black-box decisions with sufficient input subsets

- want to find smallest subsets of features which can produce the prediction

- other features are masked or imputed

- VIN (hooker 04) - variable interaction networks - global explanation based on detecting additive structure in a black-box, based on ANOVA

- local-gradient (baehrens et al. 2010) - direction of highest slope towards a particular class / other class

- golden eye (henelius et al. 2014) - randomize different groups of features and search for groups which interact

- shapley value - average marginal contribution of a feature value across all possible sets of feature values

- “how much does prediction change on average when this feature is added?”

- tells us the difference between the actual prediction and the average prediction

- estimating: all possible sets of feature values have to be evaluated with and without the j-th feature

- this includes sets of different sizes

- to evaluate, take expectation over all the other variables, fixing this variable’s value

- shapley sampling value - sample instead of exactly computing

- quantitative input influence is similar to this…

- satisfies 3 properties

- local accuracy - basically, explanation scores sum to original prediction

- missingness - features with $x’_i=0$ have 0 impact

- consistency - if a model changes so that some simplified input’s contribution increases or stays the same regardless of the other inputs, that input’s attribution should not decrease.

- interpretation: Given the current set of feature values, the contribution of a feature value to the difference between the actual prediction and the mean prediction is the estimated Shapley value

- recalculate via sampling other features in expectation

- followup propagating shapley values (chen, lundberg, & lee 2019) - can work with stacks of different models

- averaging these across dataset can be misleading (okeson et al. 2021)

- probes - check if a representation (e.g. BERT embeddings) learned a certain property (e.g. POS tagging) by seeing if we can predict this property (maybe linearly) directly from the representation

- problem: if the post-hoc probe is a complex model (e.g. MLP), it can accurately predict a property even if that property isn’t really contained in the representation

- potential solution: benchmark against control tasks, where we construct a new random task to predict given a representation, and see how well the post-hoc probe can do on that task

- Explaining individual predictions when features are dependent: More accurate approximations to Shapley values (aas et al. 2019) - tries to more accurately compute conditional expectation

- Feature relevance quantification in explainable AI: A causal problem (janzing et al. 2019) - argues we should just use unconditional expectation

- quantitative input influence - similar to shap but more general
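
The exact Shapley computation described above (every subset, with and without feature $i$) can be written in a few lines for small feature counts. In this sketch the value of a subset is the model output with absent features set to a fixed baseline; that is an assumption standing in for the expectation over the other features, and the toy model and names are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(value_fn, n_features):
    """Exact Shapley values for a set function value_fn(frozenset of indices)."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(n_features):
            # Weight of a subset of this size in the Shapley average.
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi

x = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]                    # assumed reference input
model = lambda v: 2 * v[0] + v[1] * v[2]      # toy model with an interaction

def value_fn(subset):
    return model([x[j] if j in subset else baseline[j] for j in range(3)])

phi = shapley_values(value_fn, 3)
```

Here feature 0 gets its full additive effect, the interaction between features 1 and 2 is split evenly between them, and the attributions sum to `model(x) - model(baseline)` (local accuracy). The cost grows as $2^p$, which is why sampling-based estimates are used in practice.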

- permutation importance - increase in the prediction error after we permuted the feature’s values

- $\mathbb E[Y] - \mathbb E[Y\vert X_{\sim i}]$

- If features are correlated, the permutation feature importance can be biased by unrealistic data instances (PDP problem)

- not the same as model variance

- Adding a correlated feature can decrease the importance of the associated feature
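
Permutation importance as defined above is short to implement. A minimal sketch, assuming squared error as the loss and a hypothetical `predict_fn` that maps a feature matrix to predictions:

```python
import numpy as np

def permutation_importance(predict_fn, X, y, n_repeats=10, seed=0):
    rng = np.random.default_rng(seed)
    base_err = np.mean((predict_fn(X) - y) ** 2)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        for _ in range(n_repeats):
            Xp = X.copy()
            Xp[:, j] = rng.permutation(Xp[:, j])   # break the link between X_j and y
            importances[j] += np.mean((predict_fn(Xp) - y) ** 2) - base_err
    return importances / n_repeats

rng = np.random.default_rng(1)
X = rng.standard_normal((500, 3))
model = lambda X: 3.0 * X[:, 0] + X[:, 1]     # toy model; ignores feature 2
y = model(X)
imp = permutation_importance(model, X, y)
```

The unused feature gets exactly zero importance here. Note that permuting one column independently of the others is what creates the unrealistic instances mentioned above when features are correlated.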

- L2X: information-theoretical local approximation (chen et al. 2018) - locally assign feature importance based on mutual information with function

- Learning Explainable Models Using Attribution Priors + Expected Gradients - like doing integrated gradients in many directions (e.g. by using other points in the training batch as the baseline)

- can use this prior to help improve performance

- Variable Importance Clouds: A Way to Explore Variable Importance for the Set of Good Models

- All Models are Wrong, but Many are Useful: Learning a Variable’s Importance by Studying an Entire Class of Prediction Models Simultaneously (fisher, rudin, & dominici 2018)

- Interpreting Black Box Models via Hypothesis Testing

vim (variable importance measure) framework

- VIM

- a quantitative indicator that quantifies the change of model output value w.r.t. the change or permutation of one or a set of input variables

- an indicator that quantifies the contribution of the uncertainties of one or a set of input variables to the uncertainty of model output variable

- an indicator that quantifies the strength of dependence between the model output variable and one or a set of input variables.

- difference-based - derivative-based methods, local importance measure, morris’ screening method

- LIM (local importance measure) - like LIME

- can normalize weights by values of x, y, or ratios of their standard deviations

- can also decompose variance to get the covariances between different variables

- can approximate derivative via adjoint method or smth else

- morris’ screening method

- take a grid of local derivs and look at the mean / std of these derivs

- can’t distinguish between nonlinearity / interaction

- using the squared derivative allows for a close connection w/ sobol’s total effect index

- can extend this to taking derivs wrt different combinations of variables
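
A rough sketch of the screening idea above: take finite-difference derivatives at many base points and summarize them by mean absolute value and standard deviation. This simplified version perturbs each coordinate from random base points rather than following Morris' trajectory design; the function name, step size `delta`, and toy function are assumptions.

```python
import random
from statistics import mean, pstdev

def elementary_effects(f, dim, n_points=50, delta=0.1, seed=0):
    rng = random.Random(seed)
    effects = [[] for _ in range(dim)]
    for _ in range(n_points):
        x = [rng.uniform(0, 1 - delta) for _ in range(dim)]
        fx = f(x)
        for i in range(dim):
            x_step = list(x)
            x_step[i] += delta                 # move one coordinate at a time
            effects[i].append((f(x_step) - fx) / delta)
    mu_star = [mean(abs(e) for e in es) for es in effects]   # overall influence
    sigma = [pstdev(es) for es in effects]                   # nonlinearity / interaction
    return mu_star, sigma

f = lambda x: 2 * x[0] + x[1] * x[2]   # x[3] is unused
mu_star, sigma = elementary_effects(f, dim=4)
```

The linear input has a large mean effect and near-zero spread, the interacting inputs have non-zero spread, and the unused input has zero mean effect. As noted above, a non-zero spread alone cannot tell you whether the cause is nonlinearity or interaction.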

- parametric regression

- correlation coefficient, linear reg coefficients

- partial correlation coefficient (PCC) - wipe out correlations due to other variables

- do a linear regression using the other variables (on both X and Y) and then look only at the residuals

- rank regression coefficient - better at capturing nonlinearity

- could also do polynomial regression

- more techniques (e.g. relative importance analysis RIA)
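
The residual recipe for the partial correlation coefficient above translates directly into code. A minimal sketch with hypothetical names, using ordinary least squares for both regressions:

```python
import numpy as np

def partial_corr(x, y, Z):
    """Correlation of x and y after linearly regressing Z out of both."""
    A = np.column_stack([np.ones(len(x)), Z])
    rx = x - A @ np.linalg.lstsq(A, x, rcond=None)[0]   # residual of x given Z
    ry = y - A @ np.linalg.lstsq(A, y, rcond=None)[0]   # residual of y given Z
    return np.corrcoef(rx, ry)[0, 1]

rng = np.random.default_rng(0)
z = rng.standard_normal(2000)
x = z + rng.standard_normal(2000)    # x and y are related only through z
y = z + rng.standard_normal(2000)

raw = np.corrcoef(x, y)[0, 1]
pcc = partial_corr(x, y, z)
```

In this toy setup the raw correlation is clearly positive while the partial correlation is close to zero, since all of the dependence between `x` and `y` is explained by `z`.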

- nonparametric regression

- use something like LOESS, GAM, projection pursuit

- rank variables by doing greedy search (add one var at a time) and seeing which explains the most variance

- hypothesis test

- grid-based hypothesis tests: splitting the sample space (X, Y) into grids and then testing whether the patterns of sample distributions across different grid cells are random

- ex. see if means vary

- ex. look at entropy reduction

- other hypothesis tests include the squared rank difference, 2D kolmogorov-smirnov test, and distance-based tests

- variance-based vim (sobol’s indices)

- ANOVA decomposition - decompose model into conditional expectations $Y = g_0 + \sum_i g_i (X_i) + \sum_i \sum_{j > i} g_{ij} (X_i, X_j) + \dots + g_{1,2,…, p}$

- $g_0 = \mathbf E (Y)$, $g_i = \mathbf E(Y \vert X_i) - g_0$, $g_{ij} = \mathbf E (Y \vert X_i, X_j) - g_i - g_j - g_0$, $\dots$

- take variances of these terms

- if there are correlations between variables some of these terms can misbehave

- note: $V(Y) = \sum_i V (g_i) + \sum_i \sum_{j > i} V(g_{ij}) + \dots + V(g_{1,2,\dots,p})$ - the terms are orthogonal, so their variances sum to the total variance

- anova decomposition basics - factor function into means, first-order terms, and interaction terms

- $S_i$: Sobol’s main effect index: $=V(g_i)=V(E(Y \vert X_i))=V(Y)-E(V(Y \vert X_i))$

- small value indicates $X_i$ is non-influential

- usually used to select important variables

- $S_{Ti}$: Sobol’s total effect index - include all terms (even interactions) involving a variable

- equivalently, $V(Y) - V(E[Y \vert X_{\sim i}])$

- usually used to screen unimportant variables

- it is common to normalize these indices by the total variance $V(Y)$

- three methods for computation - Fourier amplitude sensitivity test, meta-model, MCMC

- when features are correlated, these can be strange (often inflating the main effects)

- can consider $X_i^{\text{Correlated}} = E(X_i \vert X_{\sim i})$ and $X_i^{\text{Uncorrelated}} = X_i - X_i^{\text{Correlated}}$

- this can help us understand the contributions that come from different features, as well as the correlations between features (e.g. $S_i^{\text{Uncorrelated}} = V(E[Y \vert X_i^{\text{Uncorrelated}}])/V(Y)$)

- sobol indices connected to shapley value

- $SHAP_i = \underset{S, i \in S}{\sum} V(g_S) / \vert S \vert$

- efficiently compute SHAP values directly from data (williamson & feng, 2020 icml)
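
The main and total effect indices above can be estimated by plain Monte Carlo with a "pick-freeze" design when inputs are independent. The sketch below uses standard Saltelli / Jansen style estimators, normalizes by $V(Y)$, and assumes independent standard normal inputs; the function name and toy function are hypothetical.

```python
import numpy as np

def sobol_indices(f, dim, n=50_000, seed=0):
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((n, dim))
    B = rng.standard_normal((n, dim))
    fA, fB = f(A), f(B)
    var = np.var(np.concatenate([fA, fB]))
    main, total = np.zeros(dim), np.zeros(dim)
    for i in range(dim):
        AB = A.copy()
        AB[:, i] = B[:, i]                                 # resample only X_i
        fAB = f(AB)
        main[i] = np.mean(fB * (fAB - fA)) / var           # V(E[Y | X_i]) / V(Y)
        total[i] = 0.5 * np.mean((fA - fAB) ** 2) / var    # 1 - V(E[Y | X_~i]) / V(Y)
    return main, total

f = lambda X: X[:, 0] + X[:, 1] * X[:, 2]    # additive term plus a pure interaction
main, total = sobol_indices(f, dim=3)
```

For this function $X_2$ and $X_3$ have main effects near 0 but total effects near 0.5, which illustrates why the total index is the one used for screening out unimportant variables.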

- moment-independent vim

- want more than just the variance of the output variables

- e.g. delta index = average dist. between $f_Y(y)$ and $f_{Y \vert X_i}(y)$ when $X_i$ is fixed over its full distr.

- $\delta_i = \frac 1 2 \mathbb E \int \vert f_Y(y) - f_{Y\vert X_i} (y) \vert dy = \frac 1 2 \int \int \vert f_{Y, X_i}(y, x_i) - f_Y(y) f_{X_i}(x_i) \vert dy \,dx_i$

- moment-independent because it depends on the full density, not just any single moment (it acts like a measure of dependence between $Y$ and $X_i$)

- can also look at KL, max dist..

- graphic vim - like curves

- e.g. scatter plot, meta-model plot, regional VIMs, parametric VIMs

- CSM - relative change of model output mean when range of $X_i$ is reduced to any subregion

- CSV - same thing for variance

- A Simple and Effective Model-Based Variable Importance Measure

- measures the feature importance (defined as the variance of the 1D partial dependence function) of one feature conditional on different, fixed points of the other feature. When the variance is high, then the features interact with each other, if it is zero, they don’t interact.

- Learning to Explain: Generating Stable Explanations Fast - ACL Anthology (situ et al. 2021) - train a model on “teacher” importance scores (e.g. SHAP) and then use it to quickly predict importance scores on new examples

importance curves

- pdp plots - marginals (force value of plotted var to be what you want it to be)

- separate into ice plots - marginals for instance

- average of ice plots = pdp plot

- sometimes these are centered, sometimes look at derivative

- both pdp and ice can evaluate the model at many unrealistic points (feature combinations that don’t occur in the data)

- totalvis: A Principal Components Approach to Visualizing Total Effects in Black Box Models - visualize pdp plots along PC directions
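
ICE and PDP curves as described above differ only in whether you average over instances. A minimal sketch with hypothetical function names and a toy model chosen so that the average hides the per-instance behaviour:

```python
import numpy as np

def ice_curves(predict_fn, X, feature, grid):
    """One curve per instance: force `feature` to each grid value and predict."""
    curves = np.empty((X.shape[0], len(grid)))
    for k, v in enumerate(grid):
        Xv = X.copy()
        Xv[:, feature] = v
        curves[:, k] = predict_fn(Xv)
    return curves

def pdp(predict_fn, X, feature, grid):
    """Partial dependence = average of the ICE curves."""
    return ice_curves(predict_fn, X, feature, grid).mean(axis=0)

model = lambda X: X[:, 0] * X[:, 1]          # toy model: pure interaction
X = np.array([[0.0, -1.0], [0.0, 1.0], [0.0, -1.0], [0.0, 1.0]])
grid = np.array([0.0, 1.0, 2.0])

ice = ice_curves(model, X, feature=0, grid=grid)
pd_curve = pdp(model, X, feature=0, grid=grid)
```

The PDP is flat at zero even though every ICE curve has slope +1 or -1, which is the usual argument for looking at ICE curves when interactions are suspected.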

- possible solution: Marginal plots M-plots (bad name - uses conditional, not marginal)

- only use points conditioned on certain variable

- problem: this bakes things in (e.g. if two features are correlated and only one important, will say both are important)

- ALE-plots - take points conditioned on value of interest, then look at differences in predictions around a window

- this gives pure effect of that var and not the others

- needs an order (i.e. might not work for categorical)

- doesn’t give you individual curves

- recommended very highly by the book…

- they integrate as you go…

- summary: To summarize how each type of plot (PDP, M, ALE) calculates the effect of a feature at a certain grid value v:

- Partial Dependence Plots: “Let me show you what the model predicts on average when each data instance has the value v for that feature. I ignore whether the value v makes sense for all data instances.”

- M-Plots: “Let me show you what the model predicts on average for data instances that have values close to v for that feature. The effect could be due to that feature, but also due to correlated features.”

- ALE plots: “Let me show you how the model predictions change in a small “window” of the feature around v for data instances in that window.”
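
The ALE description above (differences in prediction inside a small window, accumulated across windows) can be sketched as follows. This is a simplified first-order version: quantile bins as windows and an unweighted centering step are assumptions, and `ale_1d` is a hypothetical name.

```python
import numpy as np

def ale_1d(predict_fn, X, feature, n_bins=10):
    x = X[:, feature]
    edges = np.quantile(x, np.linspace(0, 1, n_bins + 1))
    bins = np.clip(np.searchsorted(edges, x, side="right") - 1, 0, n_bins - 1)
    local = np.zeros(n_bins)
    for b in range(n_bins):
        idx = bins == b
        if not idx.any():
            continue
        lo, hi = X[idx].copy(), X[idx].copy()
        # Only instances that actually fall in the window are moved to its edges.
        lo[:, feature], hi[:, feature] = edges[b], edges[b + 1]
        local[b] = np.mean(predict_fn(hi) - predict_fn(lo))
    ale = np.concatenate([[0.0], np.cumsum(local)])   # accumulate ("integrate as you go")
    return edges, ale - ale.mean()                    # simplified centering

rng = np.random.default_rng(0)
x0 = rng.uniform(0, 1, 500)
X = np.column_stack([x0, x0 + 0.05 * rng.standard_normal(500)])  # strongly correlated
model = lambda X: X[:, 0]                                        # uses only feature 0

edges0, ale0 = ale_1d(model, X, feature=0)
edges1, ale1 = ale_1d(model, X, feature=1)
```

Feature 1 is highly correlated with feature 0 but unused by the model, and its ALE curve is flat, whereas an M-plot would attribute an effect to both.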

tree ensembles

- mean decrease impurity = MDI = Gini importance

- Breiman proposes permutation tests = MDA: Breiman, Leo. 2001. “Random Forests.” Machine Learning 45 (1). Springer: 5–32

- conditional variable importance for random forests (strobl et al. 2008)

- propose permuting conditioned on the values of variables not being permuted

- to find region in which to permute, define the grid within which the values of $X_j$ are permuted for each tree by means of the partition of the feature space induced by that tree

- many scores (such as MDI, MDA) measure marginal importance, not conditional importance

- as a result, correlated variables get importances which are too high

- Extracting Optimal Explanations for Ensemble Trees via Logical Reasoning (zhang et al. ‘21) - OptExplain: extracts global explanation of tree ensembles using logical reasoning, sampling, + optimization

- treeshap (lundberg, erion & lee, 2019): prediction-level

- individual feature attribution: want to decompose prediction into sum of attributions for each feature

- each thing can depend on all features

- Saabas method: basic thing for tree

- you get a pred at end

- count up change in value at each split for each variable

- three properties

- local acc - decomposition is exact

- missingness - features that are already missing are attributed no importance

- for missing feature, just (weighted) average nodes from each split

- consistency - if F(X) relies more on a certain feature j, $F_j(x)$ should not decrease

- however Saabas method doesn’t change $F_j(X)$ for $F’(x) = F(x) + x_j$

- these 3 things imply we want shap values

- average increase in func value when selecting i (given all subsets of other features)

- for binary features with totally random splits, same as Saabas

- can cluster based on explanation similarity (fig 4)

- can quantitatively evaluate based on clustering of explanations

- their fig 8 - qualitatively can see how different features alter output

- gini importance is like weighting all of the orderings
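
The Saabas method mentioned above ("count up change in value at each split for each variable") is easy to show on a hand-written tree. The tree structure, node values, and dictionary layout below are hypothetical; in a fitted tree, each node's `value` would be the mean training response at that node.

```python
# Toy tree: split on feature 0 at the root, then on feature 1 on the right.
tree = {
    "feature": 0, "threshold": 0.5, "value": 0.5,
    "left": {"value": 0.2},
    "right": {
        "feature": 1, "threshold": 0.5, "value": 0.8,
        "left": {"value": 0.6},
        "right": {"value": 1.0},
    },
}

def saabas(tree, x, n_features):
    """Attribute the change in node value at each split to the split feature."""
    contrib = [0.0] * n_features
    node = tree
    while "feature" in node:
        j = node["feature"]
        child = node["left"] if x[j] <= node["threshold"] else node["right"]
        contrib[j] += child["value"] - node["value"]
        node = child
    return tree["value"], contrib, node["value"]

bias, contrib, prediction = saabas(tree, x=[0.9, 0.9], n_features=2)
```

The root value plus the contributions equals the leaf prediction, so local accuracy holds by construction. The attribution depends on the order of splits along the path, which is the source of the inconsistency discussed above.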

- Explainable AI for Trees: From Local Explanations to Global Understanding (lundberg et al. 2019)

- shap-interaction scores - distribute among pairwise interactions + local effects

- plot lots of local interactions together - helps detect trends

- propose doing shap directly on loss function (identify how features contribute to loss instead of prediction)

- can run supervised clustering (where SHAP score is the label) to get meaningful clusters

- alternatively, could do smth like CCA on the model output

- understanding variable importances in forests of randomized trees (louppe et al. 2013)

- consider fully randomized trees

- assume all categorical

- randomly pick feature at each depth, split on all possibilities

- also studied by biau 2012

- extreme case of random forest w/ binary vars?

- real trees are harder: correlated vars and stuff mask results of other vars lower down

- asymptotically, randomized trees might actually be better

- Actionable Interpretability through Optimizable Counterfactual Explanations for Tree Ensembles (lucic et al. 2019)

neural nets (dnns)

dnn visualization

- good summary on distill

- visualize intermediate features

- visualize filters by layer - doesn’t really work past layer 1

- decoded filter - rafegas & vanrell 2016 - project filter weights into the image space - pooling layers make this harder

- deep visualization - yosinski 15

- Understanding Deep Image Representations by Inverting Them (mahendran & vedaldi 2014) - generate image given representation

- pruning for identifying critical data routing paths - prune net (while preserving prediction) to identify neurons which result in critical paths

- penalizing activations

- interpretable cnns (zhang et al. 2018) - penalize activations to make filters slightly more interpretable

- could also just use specific filters for specific classes…

- teaching compositionality to cnns - mask features by objects

- approaches based on maximal activation

- images that maximally activate a feature

- deconv nets - Zeiler & Fergus (2014) use deconvnets (zeiler et al. 2011) to map features back to pixel space

- given one image, get the activations (e.g. maxpool indices) and use these to get back to pixel space

- everything else does not depend on the original image

- might want to use optimization to generate image that makes optimal feature instead of picking from training set

- before this, erhan et al. did this for unsupervised features

- dosovitskiy et al 16 - train generative deconv net to create images from neuron activations

- aubry & russell 15 do similar thing

- deep dream - reconstruct image from feature map

- could use natural image prior

- could train deconvolutional NN

- also called deep neuronal tuning - GD to find image that optimally excites filters

- neuron feature - weighted average version of a set of maximum activation images that capture essential properties - rafegas_17

- can also define color selectivity index - angle between first PC of color distribution of NF and intensity axis of opponent color space

- class selectivity index - derived from classes of images that make NF

- saliency maps for each image / class

- simonyan et al 2014

- Diagnostic Visualization for Deep Neural Networks Using Stochastic Gradient Langevin Dynamics - sample deep dream images generated by gan

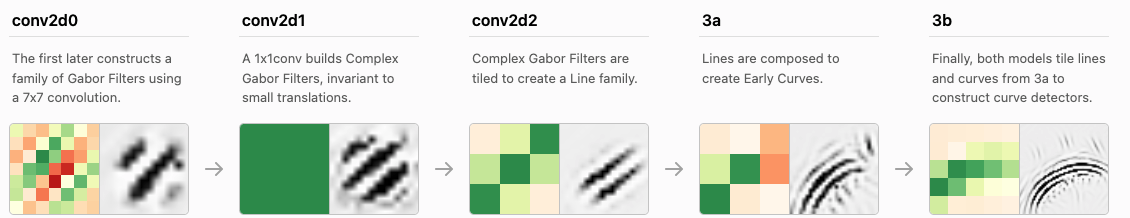

- Zoom In: An Introduction to Circuits (olah et al. 2020)

- study of inceptionV1 (GoogLeNet)

- some interesting neuron clusters: curve detectors, high-low freq detectors (useful for finding background)

- an overview of early vision (olah et al. 2020)

- many groups

- conv2d0: gabor, color-contrast, other

- conv2d1: low-freq, gabor-like, color contrast, multicolor, complex gabor, color, hatch, other

- conv2d2: color contrast, line, shifted line, textures, other, color center-surround, tiny curves, etc.

- curve-detectors (cammarata et al. 2020)

- curve-circuits (cammarata et al. 2021)

- engineering curve circuit from scratch

- posthoc prototypes

- counterfactual explanations - like adversarial, counterfactual explanation describes smallest change to feature vals that changes the prediction to a predefined output

- maybe change fewest number of variables not their values

- counterfactual should be reasonable (have likely feature values)

- human-friendly

- usually multiple possible counterfactuals (Rashomon effect)

- can use optimization to generate counterfactual

- anchors - opposite of counterfactuals, once we have these other things won’t change the prediction

- prototypes (assumed to be data instances)

- prototype = data instance that is representative of lots of points

- criticism = data instance that is not well represented by the set of prototypes

- examples: k-medoids or MMD-critic

- selects prototypes that minimize the discrepancy between the data + prototype distributions

- Architecture Disentanglement for Deep Neural Networks (hu et al. 2021) - “NAD learns to disentangle a pre-trained DNN into sub-architectures according to independent tasks”

- Explaining Deep Learning Models with Constrained Adversarial Examples

- Understanding Deep Architectures by Visual Summaries

- Semantics for Global and Local Interpretation of Deep Neural Networks

- Iterative augmentation of visual evidence for weakly-supervised lesion localization in deep interpretability frameworks

- explaining image classifiers by counterfactual generation

- generate changes (e.g. with GAN in-filling) and see if pred actually changes

- can search for smallest sufficient region and smallest destructive region

dnn concept-based explanations

- concept activation vectors

- Given: a user-defined set of examples for a concept (e.g., ‘striped’), and random examples, labeled training-data examples for the studied class (zebras)

- given trained network

- TCAV can quantify the model’s sensitivity to the concept for that class. CAVs are learned by training a linear classifier to distinguish between the activations produced by a concept’s examples and by random examples in any layer

- CAV - vector orthogonal to the classification boundary

- TCAV uses the directional derivative of the class logit along the CAV direction (taken wrt the layer’s activations)
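The CAV / TCAV steps above can be sketched on toy data. Everything here is an assumption for illustration: the activations are synthetic Gaussians, the "class logit" is a hand-written function of the activations with an analytic gradient, and a least-squares linear classifier stands in for whatever linear classifier one would actually train.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 5  # hypothetical activation dimension at the chosen layer

# Toy activations: concept examples are shifted along a hidden "concept" axis.
concept_axis = np.zeros(d)
concept_axis[0] = 1.0
acts_concept = rng.normal(size=(200, d)) + 3.0 * concept_axis
acts_random = rng.normal(size=(200, d))

# 1) CAV: unit normal of a linear classifier separating concept vs. random activations.
X = np.vstack([acts_concept, acts_random])
y = np.concatenate([np.ones(200), -np.ones(200)])
X1 = np.hstack([X, np.ones((len(X), 1))])  # append a bias column
coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
cav = coef[:-1] / np.linalg.norm(coef[:-1])

# 2) Toy class logit as a function of the activations h: logit(h) = tanh(v @ h).
v = np.array([1.0, 0.5, 0.0, 0.0, 0.0])

def logit_grad(h):
    """Gradient of tanh(v @ h) with respect to h."""
    return (1.0 - np.tanh(v @ h) ** 2) * v

# 3) TCAV score: fraction of class examples whose directional derivative
#    along the CAV is positive.
acts_class = rng.normal(size=(100, d))
dir_derivs = np.array([logit_grad(h) @ cav for h in acts_class])
tcav_score = np.mean(dir_derivs > 0)
print("CAV:", np.round(cav, 2), "TCAV score:", tcav_score)
```

In a real network the gradient would come from autodiff at the chosen layer rather than from a closed-form expression.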

- automated concept activation vectors - (a) given a set of concept discovery images, each image is segmented at different resolutions to find concepts that are captured best at different sizes; (b) after removing duplicate segments, each segment is resized to the original input size, resulting in a pool of resized segments of the discovery images; (c) resized segments are mapped to the model’s activation space at a bottleneck layer, and clustering with outlier removal is performed to discover the concepts associated with the target class; (d) the output of the method is a set of discovered concepts for each class, sorted by their importance in prediction

- On Completeness-aware Concept-Based Explanations in Deep Neural Networks

- Interpretable Basis Decomposition for Visual Explanation (zhou et al. 2018) - decompose activations of the input image into semantically interpretable components pre-trained from a large concept corpus

- Explaining in Style: Training a GAN to explain a classifier in StyleSpace (lang et al. 2021)

dnn causal-motivated attribution

- Explaining The Behavior Of Black-Box Prediction Algorithms With Causal Learning - specify some interpretable features and learn a causal graph of how the classifier uses these features (sani et al. 2021)

- partial ancestral graph (PAG) (zhang 08) is a graphical representation which includes

- directed edges (X $\to$ Y means X is a causal ancestor of Y)

- bidirected edges (X $\leftrightarrow$ Y means X and Y are both caused by some unmeasured common factor(s), e.g., $X \leftarrow U \to Y$)

- partially directed edges (X $\circ \to$ Y or X $\circ-\circ$ Y) where the circle marks indicate ambiguity about whether the endpoints are arrows or tails

- PAGs may also include additional edge types to represent selection bias

- given a model’s predictions $\hat Y$ and some potential causes $Z$, learn a PAG among them all

- assume $\hat Y$ is a causal non-ancestor of $Z$ (there is no directed path from $\hat Y$ into any element of $Z$)

- search for a PAG and not DAG bc $Z$ might not include all possibly relevant variables

- Neural Network Attributions: A Causal Perspective (Chattopadhyay et al. 2019)

- the neural network architecture is viewed as a Structural Causal Model, and a methodology to compute the causal effect of each feature on the output is presented

- CXPlain: Causal Explanations for Model Interpretation under Uncertainty (schwab & karlen, 2019)

- model-agnostic - efficiently query model to figure out which inputs are most important

- pixel-level attributions

- Amnesic Probing: Behavioral Explanation with Amnesic Counterfactuals (elazar…goldberg, 2020)

- instead of simple probing, generate counterfactuals in representations and see how final prediction changes

- remove a property (e.g. part of speech) from the repr. at a layer using Iterative Nullspace Projection (INLP) (Ravfogel et al., 2020)

- iteratively tries to predict the property linearly, then removes these directions
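The "predict the property linearly, then remove those directions" loop can be sketched as follows. This is a simplified illustration, not the reference INLP implementation: it uses a least-squares linear predictor instead of a trained classifier, and the data (`X`, `z`), the helper `linear_accuracy`, and the iteration count are hypothetical.

```python
import numpy as np

def inlp(X, z, n_iters=2):
    """Iteratively remove directions of X that linearly predict the property z."""
    d = X.shape[1]
    P = np.eye(d)  # accumulated projection matrix
    for _ in range(n_iters):
        Xp = X @ P
        # Fit a linear predictor of the property on the projected representations.
        w, *_ = np.linalg.lstsq(Xp, z, rcond=None)
        norm = np.linalg.norm(w)
        if norm < 1e-10:  # nothing linearly predictable is left
            break
        w = w / norm
        # Project onto the nullspace of w, i.e. remove that direction.
        P = P @ (np.eye(d) - np.outer(w, w))
    return P

def linear_accuracy(X, z):
    """In-sample accuracy of a least-squares linear predictor of z."""
    w, *_ = np.linalg.lstsq(X, z, rcond=None)
    return np.mean(np.sign(X @ w) == z)

rng = np.random.default_rng(0)
n, d = 2000, 6
z = rng.choice([-1.0, 1.0], size=n)  # binary property encoded as +/-1
X = rng.normal(size=(n, d))
X[:, 0] += 2.0 * z                   # property is linearly encoded in dimension 0

P = inlp(X, z, n_iters=2)
acc_before = linear_accuracy(X, z)
acc_after = linear_accuracy(X @ P, z)
print("accuracy before:", acc_before, "after:", acc_after)
```

For amnesic probing, the projected representations `X @ P` would then be fed through the rest of the model to see how the final prediction changes.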

- Bayesian Interpolants as Explanations for Neural Inferences (mcmillan 20)

- if $A \implies B$, interpolant $I$ satisfies $A\implies I$, $I \implies B$ and $I$ expressed only using variables common to $A$ and $B$

- here, $A$ is model input, $B$ is prediction, $I$ is activation of some hidden layer

- Bayesian interpolants show $P(B \vert A) \geq \alpha^2$ when $P(I \vert A) \geq \alpha$ and $P(B \vert I) \geq \alpha$

dnn feature importance

- saliency maps

- occluding parts of the image - sweep over image and remove patches - which patch removals had highest impact on change in class?

- text usually uses attention maps

- ex. karpathy et al LSTMs

- ex. lei et al. - most relevant sentences in sentiment prediction

- attention is not explanation (jain & wallace, 2019)

- attention is not not explanation (wiegreffe & pinter, 2019)

- influence = pred with a word - pred with a word masked

- attention corresponds to this kind of influence

- deceptive attention - we can successfully train a model to make similar predictions but have different attention

- class-activation map (CAM) (zhou et al. 2016)

- sum the activations across channels (weighted by their weight for a particular class)

- weirdness: drop negative activations (can be okay if using relu), normalize to 0-1 range

- CALM (kim et al. 2021) - fix issues with normalization before by introducing a latent variable on the activations
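The CAM computation described above (weighted channel sum, then dropping negatives and rescaling) is short enough to write out. The array shapes and the toy feature maps are assumptions for illustration; in a real CNN `feature_maps` would be the last conv layer's output and `class_weights` the final linear layer's row for the class.

```python
import numpy as np

def class_activation_map(feature_maps, class_weights):
    """feature_maps: (C, H, W) activations; class_weights: (C,) weights for one class."""
    cam = np.tensordot(class_weights, feature_maps, axes=1)  # weighted sum over channels
    cam = np.maximum(cam, 0.0)                               # drop negative evidence
    span = cam.max() - cam.min()
    if span > 0:
        cam = (cam - cam.min()) / span                       # rescale to the 0-1 range
    return cam

# Hypothetical 2-channel, 2x2 feature maps.
feature_maps = np.array([
    [[1.0, 2.0], [3.0, 4.0]],
    [[2.0, 2.0], [2.0, 2.0]],
])
class_weights = np.array([1.0, -1.0])
cam = class_activation_map(feature_maps, class_weights)
print(cam)
```

The clipping and rescaling steps are exactly the "weirdness" noted above: they discard the sign and scale of the evidence.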

- RISE (Petsiuk et al. 2018) - randomized input sampling

- randomly mask the images, get prediction

- saliency map = sum of masks weighted by the produced predictions
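A minimal sketch of the RISE idea, assuming independent per-pixel binary masks (the paper uses smoother, upsampled low-resolution masks) and a hypothetical `toy_model` that only looks at a 2x2 patch:

```python
import numpy as np

def rise_saliency(model, image, n_masks=4000, p_keep=0.5, seed=0):
    """Saliency = sum of random masks, each weighted by the model's score on the masked image."""
    rng = np.random.default_rng(seed)
    saliency = np.zeros_like(image, dtype=float)
    for _ in range(n_masks):
        mask = (rng.random(image.shape) < p_keep).astype(float)
        score = model(image * mask)   # prediction on the masked image
        saliency += score * mask      # pixels kept in high-scoring masks accumulate weight
    return saliency / (n_masks * p_keep)

def toy_model(img):
    """Hypothetical black box whose score depends only on a 2x2 patch."""
    return img[2:4, 2:4].mean()

image = np.ones((8, 8))
sal = rise_saliency(toy_model, image)
print(np.round(sal, 2))
```

Only forward passes are needed, so the same loop applies to any black-box model.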

- gradient-based methods - visualize what in image would change class label

- gradient * input

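- integrated gradients (sundararajan et al. 2017) - sum up the gradients along a path from some baseline to the image, then multiply by the difference between the image and the baseline (in 1d, this is just $(x - x') \int_0^1 f'(x' + \alpha (x - x')) \, d\alpha = f(x) - f(x')$, where $x'$ is the baseline)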

- in higher dimensions, such as images, we pick the path to integrate by starting at some baseline (e.g. all zero) and then get gradients as we interpolate between the zero image and the real image

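- if we picture 2 features, we can see that integrating the gradient for one feature will not just yield the change in $f$ from switching that feature alone, because each time we evaluate the gradient we change both features (the attributions summed over features still equal $f(x) - f(baseline)$)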

- explanation distill article

- ex. any pixels which are same in original image and modified image will be given 0 importance

- lots of different possible choices for baseline (e.g. random Gaussian image, blurred image, random image from the training set)

- multiplying by $x-x'$ is strange, instead can multiply by distr. weight for $x'$

- could also average over distributions of baseline (this yields expected gradients)

- when we do a Gaussian distr., this is very similar to smoothgrad
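To make the integrated-gradients recipe concrete, here is a small sketch on a hypothetical two-feature function with a hand-written gradient (a real model would use autodiff). The straight-line path, the zero baseline, and the midpoint Riemann sum are the assumed choices.

```python
import numpy as np

def integrated_gradients(grad_f, x, baseline, n_steps=200):
    """Approximate IG with a midpoint Riemann sum along the straight path baseline -> x."""
    alphas = (np.arange(n_steps) + 0.5) / n_steps
    grads = np.array([grad_f(baseline + a * (x - baseline)) for a in alphas])
    return (x - baseline) * grads.mean(axis=0)

def f(x):
    """Hypothetical model with an interaction between the two features."""
    return x[0] * x[1] + x[0] ** 2

def grad_f(x):
    """Analytic gradient of f."""
    return np.array([x[1] + 2 * x[0], x[0]])

x = np.array([1.0, 2.0])
baseline = np.zeros(2)
attr = integrated_gradients(grad_f, x, baseline)
print("attributions:", attr, "sum:", attr.sum(), "f(x) - f(baseline):", f(x) - f(baseline))
```

Here the attributions come out as 2 and 1, which sum to $f(x) - f(baseline) = 3$. The second feature's attribution (1) differs from the change obtained by switching only that feature on at the end (2), because the gradient is evaluated while both features move together.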

- lrp

- taylor decomposition

- deeplift

- smoothgrad - average gradients around local perturbations of a point

- guided backpropagation - springenberg et al

- lets you better create maximally specific image

- selvaraju 17 - grad-CAM

- grad-cam++

- competitive gradients (gupta & arora 2019)

- Label “wins” a pixel if either (a) its map assigns that pixel a positive score higher than the scores assigned by every other label or (b) a negative score lower than the scores assigned by every other label.

- final saliency map consists of scores assigned by the chosen label to each pixel it won, with the map containing a score 0 for any pixel it did not win.

- can be applied to any method which satisfies completeness (sum of pixel scores is exactly the logit value)
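The "winning pixel" rule can be written directly as array operations. This is a sketch under the assumption that per-label saliency maps have already been computed by some base method and stacked into one array; the function name and toy values are hypothetical.

```python
import numpy as np

def competitive_map(maps, label):
    """maps: (n_labels, H, W) saliency maps, one per label. Returns the competitive map for `label`."""
    chosen = maps[label]
    others = np.delete(maps, label, axis=0)
    # (a) positive score higher than every other label's score
    wins_pos = (chosen > 0) & (chosen > others.max(axis=0))
    # (b) negative score lower than every other label's score
    wins_neg = (chosen < 0) & (chosen < others.min(axis=0))
    # Keep the chosen label's score on pixels it won, 0 elsewhere.
    return np.where(wins_pos | wins_neg, chosen, 0.0)

# Hypothetical maps for 2 labels on a 1x3 "image".
maps = np.array([
    [[0.5, -0.2, 0.1]],
    [[0.3, -0.5, 0.4]],
])
print(competitive_map(maps, 0))
print(competitive_map(maps, 1))
```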

- Saliency Methods for Explaining Adversarial Attacks

- critiques

- Do Input Gradients Highlight Discriminative Features? (shah et al. 2021) - input gradients often don’t highlight relevant features (they work better for adv. robust models)

- prove/demonstrate this in synthetic dataset where $x=ye_i$ for standard basis vector $e_i$, $y \in \{\pm 1\}$

- newer methods

- Quantifying Attention Flow in Transformers (abnar & zuidema) - solves the problem where the attention of the previous layer is combined nonlinearly with the attention of the next layer by introducing attention rollout - combining attention maps and assuming linearity

- Score-CAM: Improved Visual Explanations Via Score-Weighted Class Activation Mapping

- Removing input features via a generative model to explain their attributions to classifier’s decisions

- NeuroMask: Explaining Predictions of Deep Neural Networks through Mask Learning

- Interpreting Undesirable Pixels for Image Classification on Black-Box Models

- Guideline-Based Additive Explanation for Computer-Aided Diagnosis of Lung Nodules

- Learning how to explain neural networks: PatternNet and PatternAttribution - still gradient-based

- Learning Reliable Visual Saliency for Model Explanations

- Neural Network Attributions: A Causal Perspective

- Gradient Weighted Superpixels for Interpretability in CNNs

- Decision Explanation and Feature Importance for Invertible Networks (mundhenk et al. 2019)

- Efficient Saliency Maps for Explainable AI

dnn textual explanations

- Adversarial Inference for Multi-Sentence Video Description - adversarial techniques during inference for a better multi-sentence video description

- Object Hallucination in Image Captioning - image relevance metric - assesses rate of object hallucination

- CHAIR metric - what proportion of words generated are actually in the image according to gt sentences and object segmentations

- women also snowboard - force caption models to look at people when making gender-specific predictions

- Fooling Vision and Language Models Despite Localization and Attention Mechanism - can do adversarial attacks on captioning and VQA

- Grounding of Textual Phrases in Images by Reconstruction - given text and image provide a bounding box (supervised problem w/ attention)

- Natural Language Explanations of Classifier Behavior

interactions

model-agnostic interactions

How interactions are defined and summarized is a very difficult thing to specify. For example, interactions can change based on monotonic transformations of features (e.g. $y= a \cdot b$, $\log y = \log a + \log b$). Nevertheless, when one has a specific question it can make sense to pursue finding and understanding interactions.

- build-up = context-free, less faithful: score is contribution of only variable of interest ignoring other variables

- break-down = occlusion = context-dependent, more faithful: score is contribution of variable of interest given all other variables (e.g. permutation test - randomize var of interest from right distr.)

- H-statistic: 0 for no interaction, 1 for complete interaction

- how much of the variance of the output of the joint partial dependence is explained by the interaction instead of the individuals

- \[H^2_{jk} = \underbrace{\sum_i [\overbrace{PD(x_j^{(i)}, x_k^{(i)})}^{\text{interaction}} \overbrace{- PD(x_j^{(i)}) - PD(x_k^{(i)})}^{\text{individual}}]^2}_{\text{sum over data points}} \: / \: \underbrace{\sum_i [PD(x_j^{(i)}, x_k^{(i)})]^2}_{\text{normalization}}\]

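- alternatively, using ANOVA decomp: $H_{jk}^2 = \sum_i g_{jk}(x_j^{(i)}, x_k^{(i)})^2 / \sum_i (\mathbb E [Y \vert x_j^{(i)}, x_k^{(i)}])^2$, where $g_{jk}$ is the two-way interaction term for features $j$ and $k$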

- same assumptions as PDP: features need to be independent
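A brute-force sketch of the pairwise H-statistic, estimating each partial dependence by averaging the model over the dataset. The models `f_additive` and `f_product` and the data are hypothetical, and each PD function is mean-centered before use (an assumption of this sketch, needed for the normalization to be meaningful).

```python
import numpy as np

def partial_dependence(f, X, cols, values):
    """PD of f at `values` for features `cols`, averaging over the other features in X."""
    Xmod = X.copy()
    Xmod[:, cols] = values
    return f(Xmod).mean()

def h_statistic_sq(f, X, j, k):
    """Squared H-statistic for the interaction between features j and k."""
    n = len(X)
    pd_jk = np.array([partial_dependence(f, X, [j, k], X[i, [j, k]]) for i in range(n)])
    pd_j = np.array([partial_dependence(f, X, [j], X[i, [j]]) for i in range(n)])
    pd_k = np.array([partial_dependence(f, X, [k], X[i, [k]]) for i in range(n)])
    # Center each PD function so constants do not count as interaction.
    pd_jk = pd_jk - pd_jk.mean()
    pd_j = pd_j - pd_j.mean()
    pd_k = pd_k - pd_k.mean()
    return np.sum((pd_jk - pd_j - pd_k) ** 2) / np.sum(pd_jk ** 2)

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))  # independent features, as the PDP assumption requires

def f_additive(X):
    return X[:, 0] + 2 * X[:, 1]

def f_product(X):
    return X[:, 0] * X[:, 1]

h_add = h_statistic_sq(f_additive, X, 0, 1)
h_prod = h_statistic_sq(f_product, X, 0, 1)
print("additive model H^2:", h_add)
print("product model H^2:", h_prod)
```

The additive model gives a value near 0 and the pure product gives a value near 1, matching the "no interaction" and "complete interaction" ends of the scale. The cost is quadratic in the number of data points, so in practice one would subsample.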

- alternatives

- variable interaction networks (Hooker, 2004) - decompose pred into main effects + feature interactions

- PDP-based feature interaction (greenwell et al. 2018)

- feature-screening (feng ruan’s work)

- want to find beta which is positive when a variable is important

- idea: maximize difference between (distances for interclass) and (distances for intraclass)

- using an L1 distance yields better gradients than an L2 distance

- ANOVA - factorial method to detect feature interactions based on differences among group means in a dataset